First of all, let me be clear: the headline of this article is a reference to Pete Warden's post, and should be read in the same way - as a caution against blind acceptance, rather than the wholesale condemnation of data visualisation.

An excellent blogpost has been receiving a lot of attention over the last week. Pete Warden, an experienced data scientist and author for O'Reilly on all things data, writes:

The wonderful thing about being a data scientist is that I get all of the credibility of genuine science, with none of the irritating peer review or reproducibility worries ... I thought I was publishing an entertaining view of some data I'd extracted, but it was treated like a scientific study.

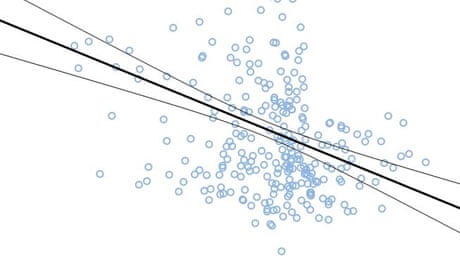

This is an important acknowledgement of a very real problem, but in my view Warden has the wrong target in his crosshairs. Data presented in any medium is a powerful tool and must be used responsibly, but it is when information is expressed visually that the risks are highest.

The central example Warden uses is his visualisation of Facebook friend networks across the United States, which proved extremely popular and was even cited in the New York Times as evidence for growing social division.

As he explains in his post, the methodology behind his underlying network graph is perfectly defensible, but the subsequent clustering process was "produced by me squinting at all the lines, coloring in some areas that seemed more connected in a paint program, and picking silly names for the areas". The exercise was only ever intended as a bit of fun with a large and interesting dataset, so there really shouldn't be any problem here.

But there is: humans are visual creatures. Peer-reviewed studies have shown that we can consume information more quickly when it is expressed in diagrams than when it is presented as text.

Even something as simple as a colour scheme can have a marked impact on the perceived credibility of information presented visually - often a considerably greater impact than the actual authority of the data source.

Another great example of this phenomenon was the Washington Post's 'map of the world's most and least racially tolerant countries', which went viral back in May of this year. It was widely accepted as an objective, scientific piece of work, despite a number of social scientists identifying flaws in the methodology and the underlying data itself.

Few will be surprised to learn that while the original map has to date received over 80,000 shares on social media - not to mention republication on dozens of large news websites around the world - the various critiques have received fewer than 1% as many shares.

I certainly agree with Warden that professional data scientists are more likely than the average member of the public to have the data, tools and capabilities to design a compelling - and potentially misleading - data visualisation. But as both Warden's map and the racial intolerance map show, a visualisation does not need to be hand-coded by an experienced designer for millions to blindly accept its message as hard fact.

My experience as a data journalist here at the Guardian has also coloured my views in this debate, as our readers are far quicker to cry foul over data quoted in text than to question a visualisation that uses data of the same quality or from the same source.

In fact, I believe we automatically attach credibility to data visualisation partly because of the way we encounter different forms of information during our education. While text is frequently presented to students for critique, diagrams and data visualisations are overwhelmingly used simply as a medium for displaying final results.

The result is that reading text and thinking "I disagree with this" comes much more naturally to us than looking at a well-presented map or line graph and thinking the same.

Of course, we must also consider that there are problems wholly distinct from the visualisation process, some of which are particularly applicable to data science.

Data scientists are typically attached to technology firms, and are therefore disproportionately likely to analyse and visualise data that is not in the public domain, leaving interested parties unable to reproduce the results or carry out analyses of their own.

Warden also makes some important points about the need to encourage greater openness from data scientists, and I include the paragraph below because he puts it better than I could:

What am I doing about it? I love efforts by teams like OpenPaths to break data out from proprietary silos so actual scientists can use them, and I do what I can to help any I run across. I popularize techniques that are common at startups, but lesser-known in academia. I'm excited when I see folks like Cameron Marlow at Facebook collaborating with academics to produce peer-reviewed research. I keep banging the drum about how shifty and feckless we data scientists really are, in the hope of damping down the starry-eyed credulity that greets our every pronouncement.

Lest I give the wrong impression, the same characteristic of data visualisation that makes it susceptible to misuse - its sheer efficiency in making the author's point - is at the same time its greatest strength.

A dataset that could otherwise take hours to unpick, and may simply cause many readers to switch off, can be brought to life, allowing rapid identification of trends and outliers. A story that would otherwise risk being labelled tl;dr can become an interactive journey.

It is a strange world we live in. Analysts, data scientists, data journalists and any other data-handling group you care to name are fighting two battles at once: convincing the wider public to pay attention to important, rigorously researched studies, while holding our heads in our hands when the same people lap up error-strewn, intentionally misleading or bit-of-fun data visualisations with barely a thought for their veracity.

Ultimately, I believe the solution is a two-way street. First, anyone building a data visualisation must go to great lengths not only to link to sources and fully explain any caveats relating to the data or the graphic itself, but also to state the degree to which their work should - or more importantly shouldn't - be taken as scientific fact. Second, we must better inform the wider public of the danger signs to watch out for before spreading what may turn out to be misinformation.

Where do you sit on this debate? Is it data scientists or data visualisations that most deserve distrust, or do you simply narrow your eyes when taking in any figures that haven't passed the peer review process? Leave a comment below or contact me directly at @jburnmurdoch.
