Although Close Quarters is primarily a multiplayer game, it must feature challenging AI bots so that players can still have fun when their internet connection is poor or there are no other players online. Bots will also play an important subsidiary role in some game modes. Hence, the bots must behave believably and manifest a range of complex behaviours, such as using cover, using items at opportune times, flanking, and throwing and escaping grenades.

The environment and the constraints:

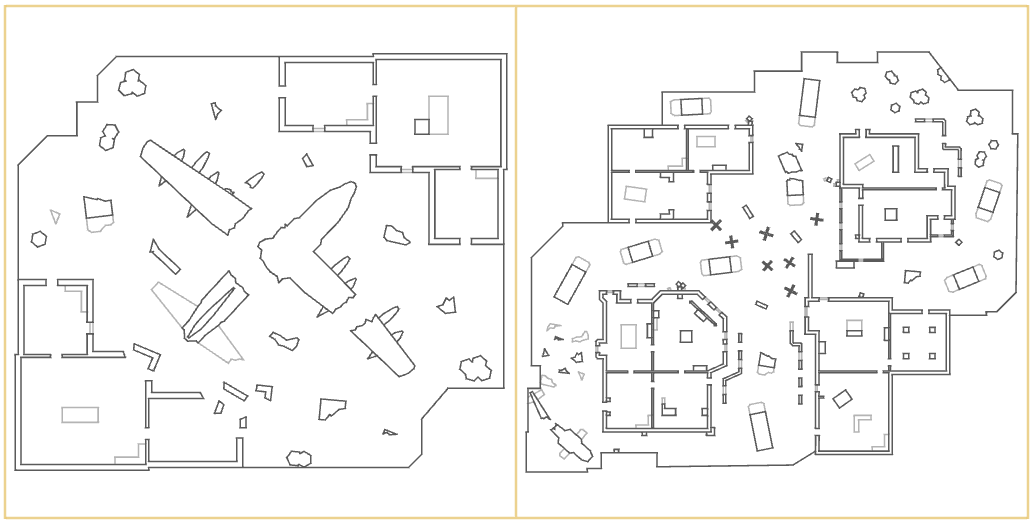

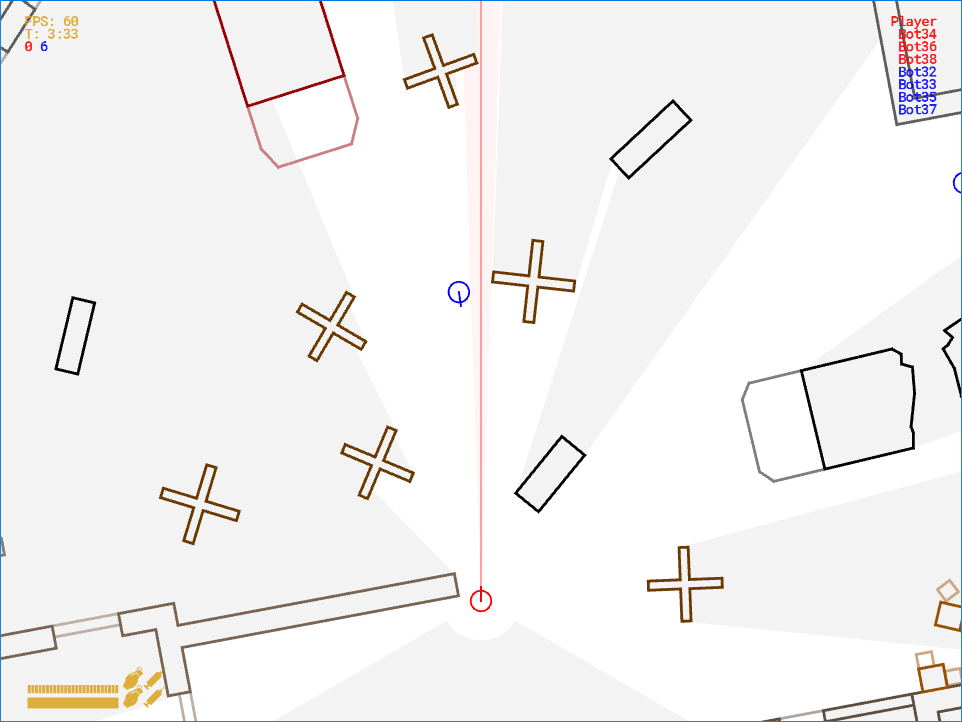

The game environments consist of polygons. Most polygons block movement, sight, and gunfire, though there are some “low” polygons that only block movement. The environments are densely packed with obstacles and cover options.

The AI is also constrained by several technical factors. Most importantly, the server, which runs the bots when few players are online, must perform well on an inexpensive VPS with at least ten bots. Moreover, CPU usage should be low enough to run multiple server instances on a single VPS without maxing out the CPU allotment and thereby irritating the VPS provider.

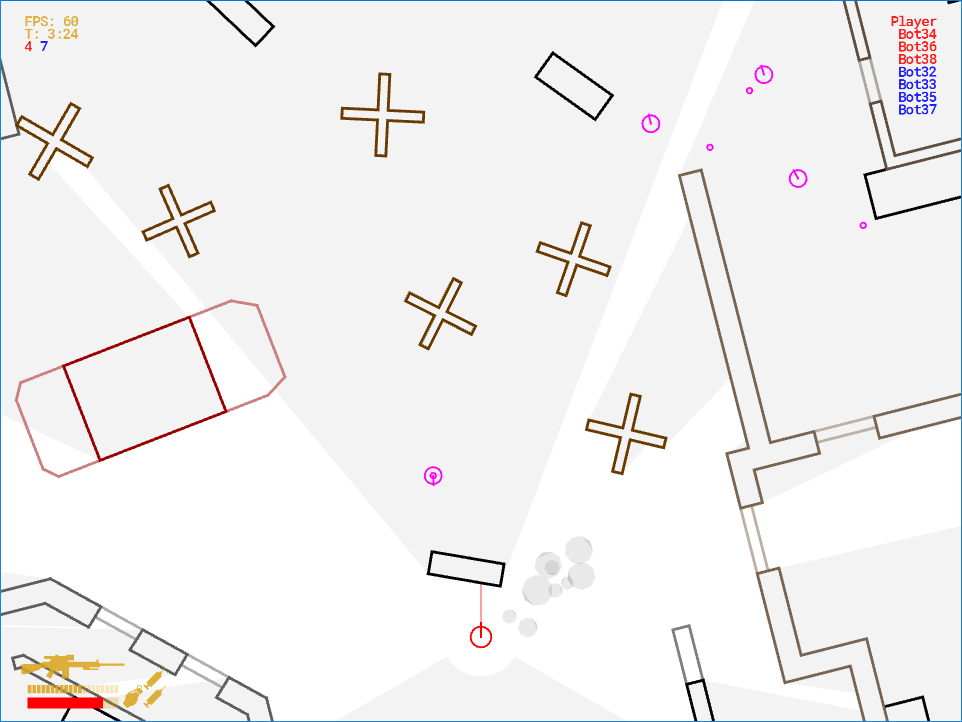

Figure 1: Environments

Figure 2: A player’s view with obstacles blocking sight (grey areas are obscured)

Navigation and visibility mesh and tactical pathfinding:

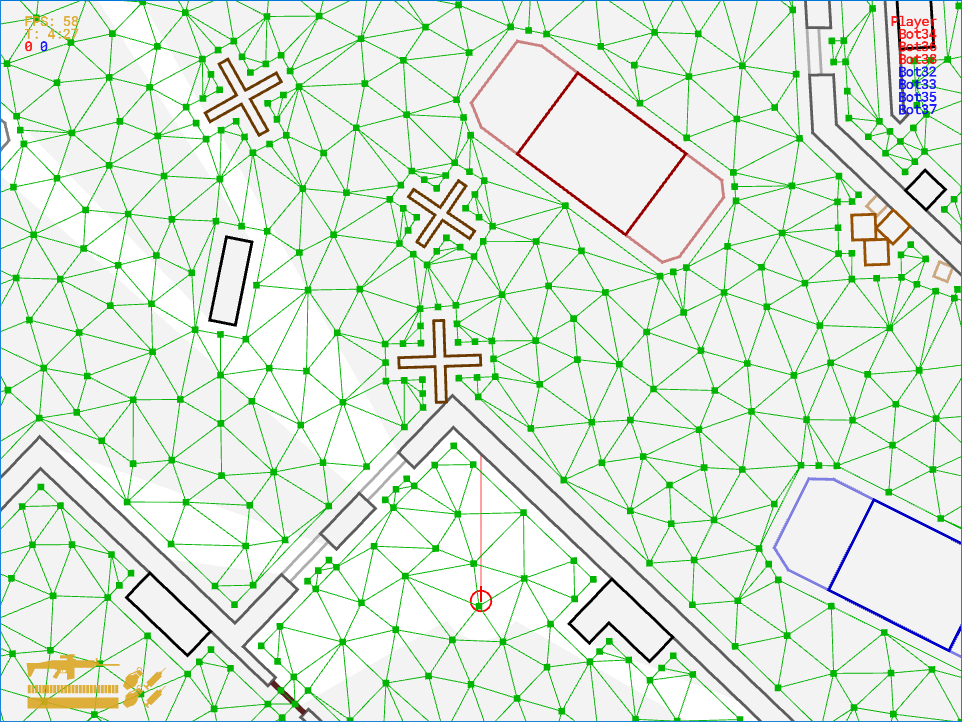

The bots rely on a dense navigation mesh consisting of discrete points. The mesh is generated by first expanding and combining the polygons constituting the environment. Extra nodes are added near corners as these locations are the most likely to offer sensible cover positions. The resulting walkable space is then triangulated to generate the mesh.

Figure 3: The navigation mesh

As the bots need to perform tactical pathfinding, the navigation mesh must hold visibility data that allows us to quickly test whether a node has cover from a given enemy. Each node therefore contains an array of 24 bytes, each representing the precomputed approximate distance between the node and the nearest sight-blocking obstacle in a certain direction.

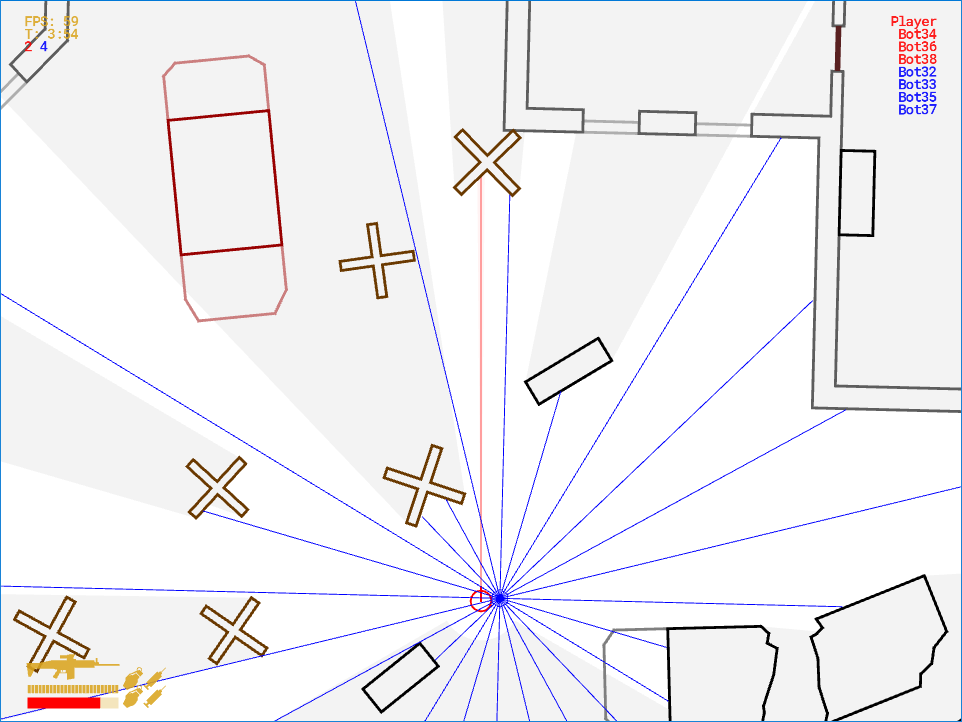

Figure 4: Visibility data (blue lines) stored in a node. Each line represents a one-byte value indicating visibility in a given direction.
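As a rough illustration of how such a 24-byte array could be queried, here is a minimal Python sketch. The names (`direction_index`, `encode_distance`, `is_probably_exposed`) and the `DISTANCE_SCALE` constant are assumptions made for this example, not the game's actual code; in particular, how distances are packed into a byte is not stated in this post.

```python
import math

NUM_DIRECTIONS = 24      # one byte per direction, as described above
DISTANCE_SCALE = 4.0     # hypothetical: world units represented by one byte step


def direction_index(dx: float, dy: float) -> int:
    """Map a direction vector to the closest of the 24 stored directions."""
    angle = math.atan2(dy, dx) % (2 * math.pi)
    sector = 2 * math.pi / NUM_DIRECTIONS
    return int(round(angle / sector)) % NUM_DIRECTIONS


def encode_distance(distance: float) -> int:
    """Pack a clear-sight distance into one byte (saturates at 255)."""
    return min(255, int(distance / DISTANCE_SCALE))


def is_probably_exposed(node_pos, visibility, enemy_pos) -> bool:
    """True if the enemy is closer than the nearest sight-blocker in its direction."""
    dx = enemy_pos[0] - node_pos[0]
    dy = enemy_pos[1] - node_pos[1]
    clear_distance = visibility[direction_index(dx, dy)] * DISTANCE_SCALE
    return math.hypot(dx, dy) < clear_distance
```

The result is only an approximation: an enemy that falls between two stored directions, or beyond the saturated byte range, can be misclassified, which is why a real line-of-sight check is still needed wherever the answer matters.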

With this data, it is possible to perform A* graph searches on the navigation mesh wherein nodes that are probably exposed to known enemies are penalized without performing many costly line-of-sight checks. To test if a given node is exposed to an enemy, we determine the direction and distance from the node to that enemy and then check whether that distance is lower than that stored in the array element corresponding most closely to that direction. Additionally, we may check whether the enemy is facing the node. When we apply a penalty multiplier to the traversal cost of exposed nodes, the resulting path tends to avoid such nodes.
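The penalized search can be sketched as an ordinary A* in which stepping onto an exposed node costs more. This is a minimal sketch under assumptions: the graph is given as plain dictionaries, the set of exposed nodes is computed beforehand, and the `penalty` value of 5 is a placeholder rather than the multiplier the game uses.

```python
import heapq
import math


def tactical_a_star(positions, neighbours, start, goal, exposed, penalty=5.0):
    """A* over node ids; moving onto an exposed node costs `penalty` times more."""
    def heuristic(node):
        # Straight-line distance stays admissible as long as penalty >= 1.
        return math.dist(positions[node], positions[goal])

    open_heap = [(heuristic(start), start)]
    g_cost = {start: 0.0}
    came_from = {}
    closed = set()
    while open_heap:
        _, current = heapq.heappop(open_heap)
        if current == goal:
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if current in closed:
            continue
        closed.add(current)
        for nxt in neighbours[current]:
            step = math.dist(positions[current], positions[nxt])
            if nxt in exposed:
                step *= penalty
            new_cost = g_cost[current] + step
            if new_cost < g_cost.get(nxt, math.inf):
                g_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), nxt))
    return None  # goal unreachable
```

Because exposure only raises the cost instead of forbidding the node, a bot can still cross open ground when no covered route exists.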

The same visibility data can be used for other “tactical” actions besides pathfinding between two predetermined points. For example, a bot seeks cover by executing a breadth-first search and stopping once it finds a covered node. Actual line-of-sight checks are used to verify that a node that seems to provide cover actually does. Similarly, we can generate flanking attack paths by executing an A* search towards the target while heavily penalizing exposed nodes within its firing cone and stopping once we reach an exposed node outside of that firing cone. One problem with this approach is that bots left out of sight tend to advance constantly towards their target and therefore seem too aggressive. This could perhaps be solved by adjusting the A* heuristic to guide the bot not directly towards the target but towards any node at a preferred distance from the target.
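The cover search might look something like the following sketch. The two callbacks are assumptions for illustration: `seems_covered` stands for the cheap test against the stored visibility data, and `enemy_can_see` stands for the expensive, exact line-of-sight check. The `max_expanded` cap is likewise a hypothetical safeguard.

```python
from collections import deque


def find_cover(neighbours, start, seems_covered, enemy_can_see, max_expanded=500):
    """Breadth-first search outward from `start` for the first verified cover node."""
    queue = deque([start])
    visited = {start}
    expanded = 0
    while queue and expanded < max_expanded:
        node = queue.popleft()
        expanded += 1
        # Cheap test first; only candidates that pass it pay for a real check.
        if seems_covered(node) and not enemy_can_see(node):
            return node
        for nxt in neighbours[node]:
            if nxt not in visited:
                visited.add(nxt)
                queue.append(nxt)
    return None  # no cover found within the search budget
```

Since breadth-first search expands nodes in order of hop count, the first node returned is among the closest cover positions in terms of mesh steps, not necessarily in travel distance.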

Bot senses and memory:

For the bots to behave believably, they must not be seen as “cheating”. In other words, the information a bot works with should be similar to the information a player would have in its place. For example, an enemy behind an obstacle should be hidden from the bot just as it would be hidden to a player.

There are two ways a player might discover an enemy’s position: they may see the enemy, or they may hear the enemy moving, shooting, or performing some other action.

Each bot maintains a list of known “facts” about enemies’ positions and orientations. These facts expire after ten seconds pass without updates. A fact pertaining to a given enemy is updated whenever the bot can see or hear that enemy. To simulate uncertainty, when a bot hears an enemy, the resulting position of the relevant fact is offset from the enemy’s actual position in a random direction and distance based on how close the sound was to the bot (see video at 1:28).
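A fact store along these lines could be sketched as follows. The ten-second lifetime comes from the description above; everything else is an assumption for illustration, including the class and method names and the `ERROR_PER_UNIT` constant that makes the hearing error grow linearly with distance. Orientation is left out to keep the sketch short.

```python
import math
import random
from dataclasses import dataclass

FACT_LIFETIME = 10.0    # seconds without updates before a fact expires
ERROR_PER_UNIT = 0.2    # hypothetical: maximum error per unit of distance to the sound


@dataclass
class Fact:
    position: tuple
    updated_at: float


class BotMemory:
    def __init__(self, rng=None):
        self.facts = {}                      # enemy id -> Fact
        self.rng = rng or random.Random()

    def see(self, enemy_id, enemy_pos, now):
        """Seeing an enemy records its exact position."""
        self.facts[enemy_id] = Fact(tuple(enemy_pos), now)

    def hear(self, enemy_id, sound_pos, bot_pos, now):
        """Hearing an enemy records a guess that is fuzzier for distant sounds."""
        max_error = math.dist(sound_pos, bot_pos) * ERROR_PER_UNIT
        angle = self.rng.uniform(0.0, 2 * math.pi)
        radius = self.rng.uniform(0.0, max_error)
        guess = (sound_pos[0] + radius * math.cos(angle),
                 sound_pos[1] + radius * math.sin(angle))
        self.facts[enemy_id] = Fact(guess, now)

    def forget_stale(self, now):
        """Drop facts that have not been refreshed within FACT_LIFETIME."""
        self.facts = {enemy_id: fact for enemy_id, fact in self.facts.items()
                      if now - fact.updated_at < FACT_LIFETIME}
```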

Figure 5: Facts (pink circles) in a bot’s memory

Behaviour tree:

In an earlier version of Close Quarters, the AI used a STRIPS-style planner, an approach popularized by F.E.A.R. in 2005 under the name Goal-Oriented Action Planning (GOAP). In this approach, the relationships between different AI behaviours are not predetermined by the programmer. Rather, each behaviour contains a list of binary preconditions and outcomes. Each bot has a goal world state and uses an A* graph search to find a sequence of behaviours that would achieve it. This approach worked well, but I felt that it was overkill for this application and better suited to AI that needs to develop elaborate plans involving many different behaviours. In most cases, I already knew the circumstances under which I wanted a bot to execute one behaviour or another, so using A* to determine that was an unnecessary waste of CPU resources.

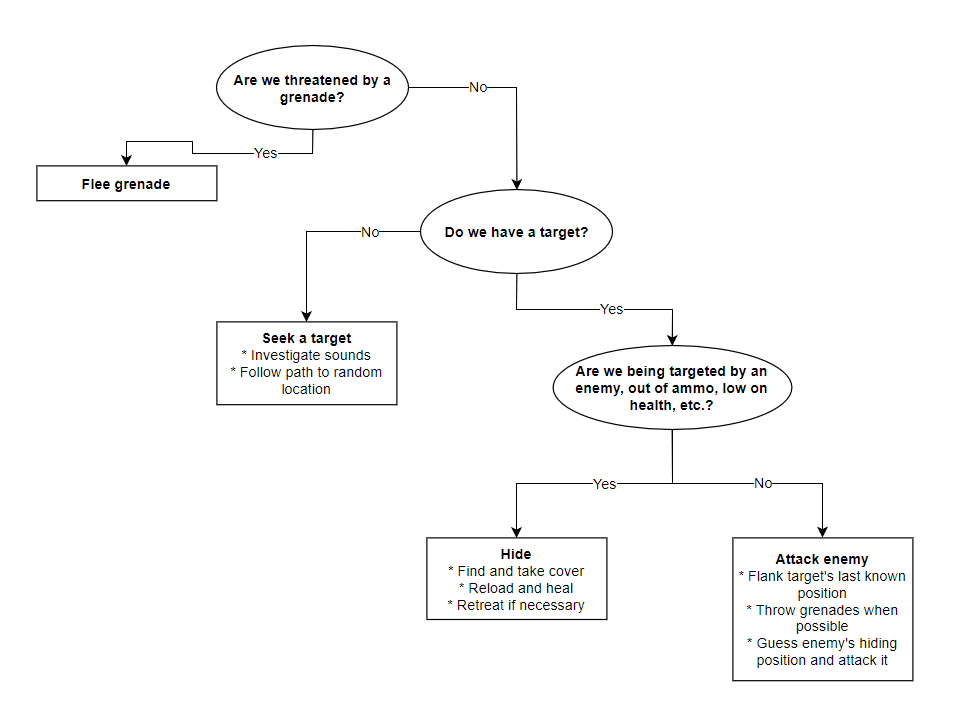

Hence, this time around the bots use a simple decision and behaviour tree. Each step, a bot traverses the tree from the root until it reaches a behaviour. If that behaviour is the one already being executed, the bot continues that behaviour. If not, the bot initiates the behaviour and begins running it.

Some behaviours can “block”, meaning that they will prevent the bot from re-traversing the tree until some condition is met. This is useful, for example, when ensuring that bots properly make it behind cover before deciding to attack. Behaviours can also “cancel” themselves, forcing a re-traversal of the tree and re-initiation of the behaviour. This is useful, for example, when a bot is fleeing a grenade and another grenade appears that compromises the chosen flight position.
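The traversal, blocking, and cancelling rules can be sketched in a few lines. This is a simplified illustration, not the game's code: the `Behaviour` hooks, the `Brain` class, the two example behaviours, and the dictionary standing in for a bot are all hypothetical, and the decision tree is reduced to a single function.

```python
class Behaviour:
    """Base class; the hook names are hypothetical."""

    def start(self, bot):
        pass

    def update(self, bot):
        pass

    def is_blocking(self, bot):
        return False      # True prevents re-traversal of the tree

    def wants_cancel(self, bot):
        return False      # True forces re-traversal and re-initiation


class TakeCover(Behaviour):
    def start(self, bot):
        bot["log"].append("start take_cover")

    def is_blocking(self, bot):
        return not bot["in_cover"]   # block until the bot is behind cover


class Attack(Behaviour):
    def start(self, bot):
        bot["log"].append("start attack")


TAKE_COVER, ATTACK = TakeCover(), Attack()


def choose_behaviour(bot):
    """A tiny stand-in for the decision tree: walk conditions down to a leaf."""
    if bot["under_fire"] and not bot["in_cover"]:
        return TAKE_COVER
    return ATTACK


class Brain:
    def __init__(self, choose):
        self.choose = choose
        self.current = None

    def step(self, bot):
        if self.current is not None and self.current.wants_cancel(bot):
            self.current = None          # forget it so it is started afresh
        if self.current is None or not self.current.is_blocking(bot):
            chosen = self.choose(bot)
            if chosen is not self.current:
                self.current = chosen
                chosen.start(bot)
        self.current.update(bot)
```

Clearing `current` on cancel means that even if the tree selects the same behaviour again, its `start` hook runs a second time, which matches the re-initiation described above.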

Figure 6: The current decision and behaviour tree

Some secondary behaviours are coded within other, more general behaviours. For example, if a bot tries to attack an enemy and finds that the enemy is not in its suspected position, the bot estimates where the enemy might now be and calculates a new attack path without ever exiting the attack behaviour.

Distributing the load:

It is not necessary that every bot be updated every physics frame, i.e. 40 times per second. To reduce CPU usage, each bot only “thinks” 20 times per second (a figure that could be further reduced if necessary). Hence, on each physics step, the AI of only half the bots is updated.
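One simple way to stagger the updates is to assign each bot to a slot by its index. The sketch below assumes bots are kept in a list and that the function name `bots_to_update` is free to choose; the 40 Hz and 20 Hz figures come from the text.

```python
PHYSICS_HZ = 40                      # physics steps per second
AI_HZ = 20                           # how often each bot thinks per second
STRIDE = PHYSICS_HZ // AI_HZ         # each bot thinks every STRIDE-th physics step


def bots_to_update(bots, physics_step):
    """Return the bots whose turn it is to think on this physics step."""
    slot = physics_step % STRIDE
    return [bot for index, bot in enumerate(bots) if index % STRIDE == slot]
```

Lowering `AI_HZ` to 10 would raise `STRIDE` to 4, so only a quarter of the bots would think on any given step.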

Grenade handling:

One major challenge is having the bots use grenades intelligently. Grenade handling is much more complicated than shooting because grenades can be bounced off walls, have an area effect, and take time to throw. Luckily, the bots don’t need to use grenades perfectly, just sensibly.

Traditional approaches to this problem include precomputing grenade trajectories at navigation nodes, but doing so added several seconds to each map’s load time, which conflicts with my goal of having Close Quarters place players in a battle within a few seconds of opening the game.

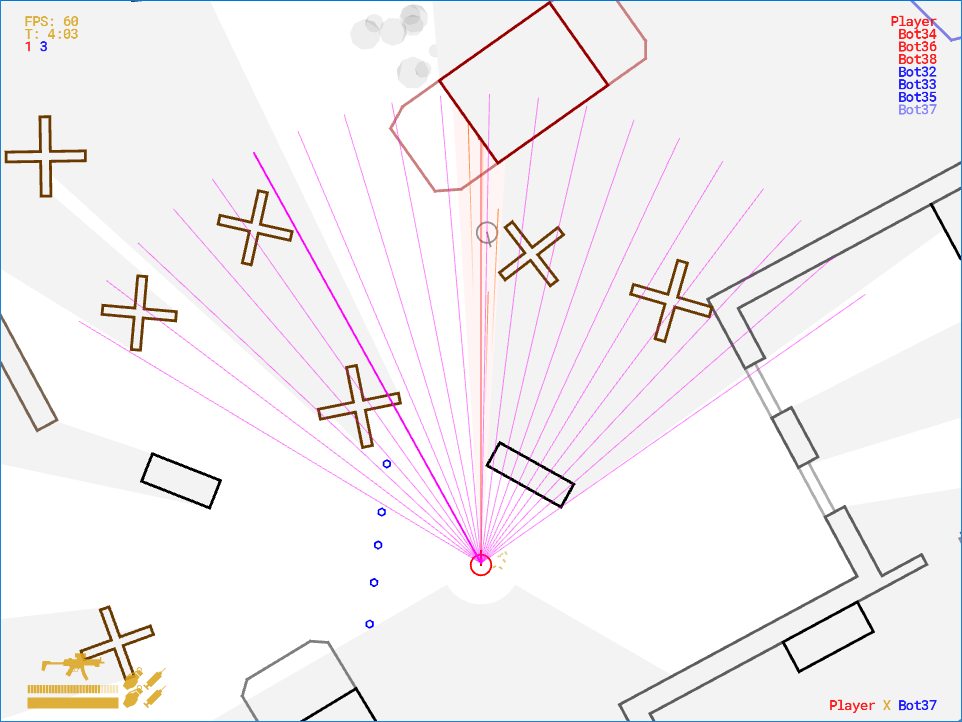

Hence, the bots look for opportunities to use grenades by calculating grenade trajectories on the fly. Each step, an attacking bot tests several viable trajectories in a single direction, which lies up to 60 degrees away from the direction to the intended target. If a grenade thrown along one of the tested trajectories might kill the target and the target is out of sight, the bot throws a grenade. The test direction is cycled each AI step.

Figure 7: The directions a bot will test over the course of one second (pale pink lines) and the trajectories tested (blue circles) along the direction selected in the current step (solid pink line).
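The cycling of test directions might be sketched as follows. Several details here are assumptions rather than facts from the post: that the sweep covers 20 evenly spaced directions (one per AI step over a second), the `LETHAL_RADIUS` and `THROW_SPEEDS` values, and the `simulate` callback, which stands in for whatever routine traces a grenade's bounces and returns where it would explode (or `None` if the throw is not viable).

```python
import math

MAX_OFFSET_DEG = 60.0        # from the text: up to 60 degrees off the target bearing
DIRECTIONS_PER_CYCLE = 20    # assumption: one direction per AI step over one second
LETHAL_RADIUS = 3.0          # hypothetical blast radius in world units
THROW_SPEEDS = (1.0, 2.0, 3.0)   # hypothetical throw strengths tested per direction


def grenade_test_direction(target_bearing_deg, ai_step):
    """Sweep from -60 to +60 degrees around the target bearing, one slot per step."""
    slot = ai_step % DIRECTIONS_PER_CYCLE
    fraction = slot / (DIRECTIONS_PER_CYCLE - 1)
    offset = -MAX_OFFSET_DEG + fraction * 2 * MAX_OFFSET_DEG
    return (target_bearing_deg + offset) % 360.0


def choose_throw(bot_pos, target_pos, target_visible, ai_step, simulate):
    """Return (direction, speed) for a promising throw this step, else None."""
    if target_visible:
        return None   # grenades are reserved for targets that are out of sight
    bearing = math.degrees(math.atan2(target_pos[1] - bot_pos[1],
                                      target_pos[0] - bot_pos[0]))
    direction = grenade_test_direction(bearing, ai_step)
    for speed in THROW_SPEEDS:
        landing = simulate(bot_pos, direction, speed)
        if landing is not None and math.dist(landing, target_pos) <= LETHAL_RADIUS:
            return direction, speed
    return None
```

Testing only one direction per step keeps the per-step cost fixed at a handful of trajectory simulations, at the price of a coarse angular resolution.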

The bots’ grenade use is therefore opportunistic as a bot will not move to a position specifically to throw a grenade. However, the path that a bot chooses to attack an enemy will often also be a sensible path from which to throw a grenade.

A significant drawback is that the limited number of directions tested means that bots will miss opportunities to bounce grenades off small obstacles. This is most noticeable around doorframes, where bots usually won’t recognize an opportunity to use a grenade. This issue could be addressed by testing multiple directions each step, thereby reducing the angle between one test direction and the next.

Towards more human behaviour:

One issue that quickly became evident was that bots were too quick on the trigger, so they were very difficult to beat in a one-on-one engagement. The average human reaction time to visual stimuli is around 250 milliseconds, but at 20 steps per second, the maximum bot reaction time would only be 50 milliseconds!

To address this issue, I added an intentional delay between when a bot acquires a shot and when it shoots, bringing its reaction time insofar as shooting is concerned more in line with a human player’s reaction time.
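Such a delay could be implemented with a small timer that resets whenever the bot loses its shot. The sketch below is an assumption about how this might be done; the class name `TriggerDelay` and the 0.25-second default (chosen to match the human average cited above) are not taken from the game.

```python
REACTION_DELAY = 0.25   # seconds; hypothetical tuning value


class TriggerDelay:
    def __init__(self, delay=REACTION_DELAY):
        self.delay = delay
        self.acquired_at = None   # time at which the current shot was acquired

    def may_fire(self, has_shot, now):
        """Allow firing only once the shot has been held for `delay` seconds."""
        if not has_shot:
            self.acquired_at = None   # losing the shot resets the timer
            return False
        if self.acquired_at is None:
            self.acquired_at = now
        return now - self.acquired_at >= self.delay
```

Randomizing `delay` per bot or per engagement would be a natural extension if a fixed delay feels too uniform.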

Further development:

The above systems provide a strong foundational AI, but there is room for major improvements. For example, currently, a bot’s spatial reasoning is limited to its immediate environment, so while a bot usually tries to flank its enemy, it will often miss flanking paths around rather large obstacles. Similarly, bots are only roughly aware of their teammates’ presence, so they will sometimes clump up when it makes more sense to split up and flank.

Hence, the future devlogs in this series will cover:

- Squad-level AI, including coordinated flanking and suppressing fire, and higher-level spatial reasoning

- Deliberately assisting human teammates

- Following a human player’s orders

You can play the in-development version of Close Quarters here and follow its development on Reddit or Twitter.

This post was discussed here.

Note: This post was originally published in the Close Quarters Devlog, and is reproduced here with the kind permission of the original author.