As the power of artificial intelligence grows, the likelihood of a future war filled with killer robots grows as well. Proponents suggest that lethal autonomous weapon systems (LAWs) might cause less “collateral damage,” while critics warn that giving machines the power of life and death would be a terrible mistake.

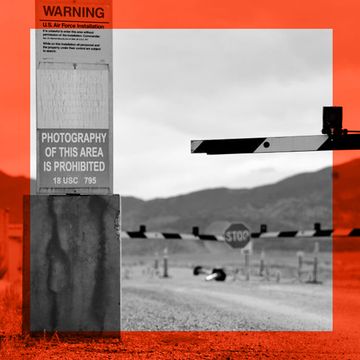

Last month’s UN meeting on ‘killer robots’ in Geneva ended with victory for the machines, as a small number of countries blocked progress towards an international ban. Some opponents of such a ban, like Russia and Israel, were to be expected since both nations already have advanced military AI programs. But surprisingly, the U.S. also agreed with them.

Picking Sides

In July, 2,400 researchers, including Elon Musk, signed a pledge not to work on robots that can attack without human oversight. Google faced a revolt by employees over an Artificial Intelligence program to help drones spot targets for the Pentagon, and decided not to continue with the work. KAIST, one of South Korea’s top universities, suffered an international academic boycott over its work on military robots until it too stopped work on them. Groups like the Campaign to Stop Killer Robots are becoming more visible, and Paul Scharre’s book Army of None, which details the dangers of autonomous weapons, has been hugely successful.

But the U.S. government’s argument is that any regulation would be premature and would hinder new developments that could protect civilians. The Pentagon’s current policy is that there should always be a ‘man in the loop’ controlling any lethal system, but the submission from Washington to the recent UN meeting argued otherwise:

“Weapons that do what commanders and operators intend can effectuate their intentions to conduct operations in compliance with the law of war and to minimize harm to civilians.”

The argument, in other words, is that autonomous weapons could carry out more selective strikes in situations where faulty human judgment would have botched them.

“Most people don’t understand that these systems offer the opportunity to decide when not to fire, even when commanded by a human if it is deemed unethical,” says Professor Ron Arkin, a roboticist at the Georgia Institute of Technology.

Arkin suggests that autonomous weapons would be fitted with an “ethical governor” helping to ensure they only strike legitimate targets and avoid ambulances, hospitals, and other invalid targets.

Arkin has long argued for regulation rather than prohibition of LAWs. He points out that in modern warfare, precision-guided smart weapons are now seen as essential for avoiding civilian casualties. The use of unguided weapons in populated areas, like the barrel bombs dropped by the Syrian regime, looks like deliberate brutality.

Smarter is better, and an autonomous system might just be better than a human one.

Robotic Vision

The greatest promise for smarter machines comes from deep learning, an AI technique that feeds massive amounts of sample data to a neural network until it learns to make necessary distinctions.
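To make the idea of learning from sample data concrete, here is a minimal sketch in Python. It is an illustration only, not a deep network and not any fielded system: it trains a single artificial neuron, the basic building block that deep learning stacks in many layers, on six made-up data points. The two numeric features, the labels, and the function names `train_neuron` and `predict` are all assumptions invented for this example.

```python
import math
import random


def train_neuron(samples, labels, epochs=200, lr=0.5, seed=0):
    """Fit one logistic neuron by repeatedly showing it labeled samples."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in samples[0]]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            pred = 1.0 / (1.0 + math.exp(-z))  # neuron output between 0 and 1
            error = pred - y                   # how far off the guess was
            # Nudge each weight a little in the direction that reduces the error.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the neuron's score is positive, otherwise 0."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


# Hypothetical toy data: two invented features per sample, label 1 or 0.
samples = [(0.9, 0.8), (0.8, 0.9), (1.0, 0.7), (0.1, 0.2), (0.2, 0.1), (0.0, 0.3)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_neuron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
```

Real image-recognition systems differ mainly in scale: millions of adjustable weights and vast numbers of labeled images rather than three numbers and six points. The underlying loop of guessing, measuring the error, and adjusting is the same.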

In principle, deep learning might help distinguish between combatants and non-combatants, valid targets and invalid ones. Arkin warns that much more research needs to be conducted before fielding them in lethal systems, but there are already systems which can outmatch humans in recognition tasks.

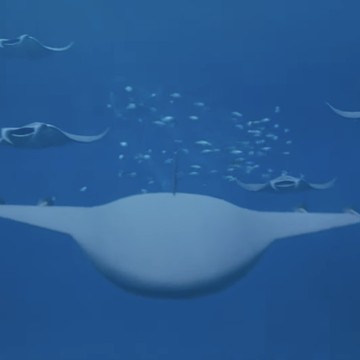

Australian beaches are now guarded by Little Ripper quadcopter drones equipped with an AI system known as SharkSpotter, developed by the University of Technology Sydney (UTS). The system automatically scans the water for sharks and alerts the human operator when it sees something dangerous. SharkSpotter can identify humans, dolphins, boats, surfboards, rays, and other objects in the water and tell them apart from sharks.

“The system can detect and identify around sixteen different objects with high accuracy. These advanced machine learning techniques significantly improve aerial detection accuracy to better than 90 percent,” says UTS researcher Nabin Sharma. That compares with about 20 to 30 percent for a human operator looking at aerial imagery, though SharkSpotter’s identification is still checked by a human before the alarm is raised.

In combat, a drone operator squinting at a screen may struggle to tell whether people on the ground are insurgents with AK-47s or farmers with spades. Arkin says humans have a tendency toward “scenario fulfillment,” or seeing what we expect to see, and ignoring contradictory data in stressful situations. This effect contributed to the accidental shooting down of an Iranian airliner by the USS Vincennes in 1988.

“Robots can be developed so that they are not vulnerable to such patterns of behavior,” says Arkin.

At the very least, these AI-guided weapons would be better than current ‘smart bombs’ which lack any discrimination. On August 9th a laser-guided bomb from the Saudi coalition struck a bus full of schoolchildren in Yemen, killing forty of them.

“Recognition of a school bus could be relatively straightforward to implement if the bus is appropriately marked,” says Arkin. “There is no guarantee it would work under all conditions. But sometimes is better than never.”

A Flaw in the Machine

Noel Sharkey is professor of artificial intelligence and robotics at the University of Sheffield and chair of the International Committee for Robot Arms Control. As a leading voice against AI weapons, he remains unconvinced that AI would be an improvement over current weapons technology.

“After all of the hype about face recognition technologies, it turns out that they work really badly for women and darker shades of skin,” says Sharkey. “And there are many adversarial tests showing how these technologies can be easily gamed or misled.”

This was demonstrated in 2017 when some MIT students found a way of fooling an image-recognition system into thinking a plastic turtle was a rifle.
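The general trick behind such adversarial tests can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the method the MIT students used and not a real image recognizer: the four weights, the bias, the input values, and the names `classify` and `adversarial_nudge` are all invented for the example. The “classifier” is a simple weighted sum, and the attack shifts every input value slightly in whichever direction raises the “rifle” score.

```python
# Hypothetical linear classifier: a positive score means "rifle".
WEIGHTS = [0.6, -0.4, 0.3, -0.5]
BIAS = -0.1


def score(features):
    """Weighted sum of the input features plus a bias term."""
    return sum(w * f for w, f in zip(WEIGHTS, features)) + BIAS


def classify(features):
    return "rifle" if score(features) > 0 else "turtle"


def adversarial_nudge(features, epsilon):
    """Shift each feature by epsilon in the direction that raises the score."""
    return [f + epsilon * (1 if w > 0 else -1) for w, f in zip(WEIGHTS, features)]


original = [0.2, 0.5, 0.3, 0.4]                        # invented input values
tampered = adversarial_nudge(original, epsilon=0.2)    # small, targeted change

before = classify(original)
after = classify(tampered)
```

Here no single value moves by more than 0.2, yet the label flips from “turtle” to “rifle” because every small change pushes the score the same way. Attacks on real systems exploit the same weakness across thousands of pixels, where each individual change can be too small for a person to notice.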

Today’s artificial intelligence cannot make better battlefield judgments than humans can, but AI is getting smarter, and one day it could theoretically help limit the loss of innocent lives caught in the crossfire.

“We cannot simply accept the current status quo with respect to noncombatant deaths,” says Arkin. “We should aim to do better.”

Sharkey disagrees that autonomous weapons are the tools that will eliminate collateral damage, citing a principle of international humanitarian law known as the Martens Clause. The clause states that “the human person remains under the protection of the principles of humanity and the dictates of the public conscience.” In Sharkey’s reading, this means that however well machines work, they should not be making life-and-death decisions in warfare.

“A prohibition treaty is urgently needed before massive international investment goes into LAWs,” says Sharkey. With DARPA announcing a new $2 billion investment in “next wave” military AI, time is running out.

The U.S. decision to back the development of ‘killer robots’ is a controversial one, and the argument is far from over. But if LAWs are fielded first, we may find out the hard way which side is right.