It's Not All About You: What Privacy Advocates Don't Get About Data Tracking on the Web

People condemn targeted advertising for its "creepiness" but the real issue is that we are giving private companies more power.

Jonathan Zittrain noted last summer, "If what you are getting online is for free, you are not the customer, you are the product." This is just a fact: The Internet of free platforms, free services and free content is wholly subsidized by targeted advertising, the efficacy (and thus profitability) of which relies on collecting and mining user data. We experience this commodification of our attention every day in virtually everything we do online, whether it's searching, checking email, using Facebook or reading The Atlantic Technology section on this site. That is to say, right now you are a product.

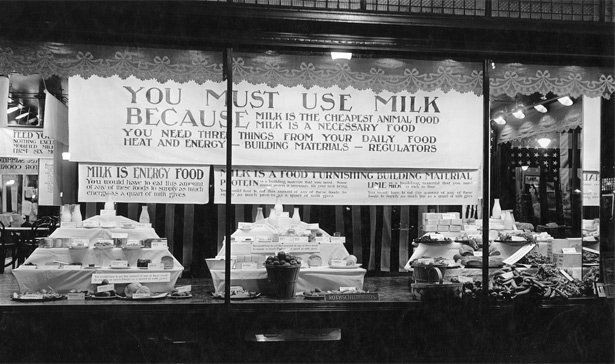

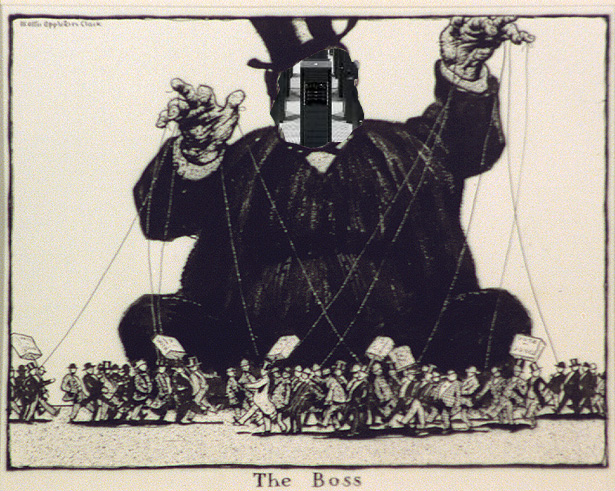

Most of us, myself included, have not come to terms with what it means to "be the product." In searching for a framework to make sense of this new dynamic, we often rely on well-established pre-digital notions of privacy. The privacy discourse frames the issue in an egocentric manner, as a bargain between consumers and companies: the company will know x, y and z about me and in exchange I get free email, good recommendations, and a plethora of convenient services. But the bargain that we are making is a collective one, and the costs will be felt at a societal scale. When we think in terms of power, it is clear we are getting a raw deal: we grant private entities -- with no interest in the public good and no public accountability -- greater powers of persuasion than anyone has ever had before and in exchange we get free email.

The privacy discourse is propelled by the "creepy" feeling of being under the gaze of an omniscient observer that people get when they see targeted ads based on data about their behavior. Charles Duhigg recently highlighted a prime example of this data-driven creepiness when he revealed that Target is able to mine purchasing behavior data to determine if a woman is pregnant, sometimes before she has even told her family. Fundamentally, people are uncomfortable with the feeling that entities know things about them that they didn't tell them, or at least that they didn't know they told them.

For many people the data-for-free-stuff deal is a bargain worth making. Proponents of this hyper-targeted world tell us to "learn to love" the targeting; after all, we are merely being provided with ads for "stuff you would probably like to buy." Oh, I was just thinking I needed a new widget, and here is a link to a store that sells widgets! It's great, right? The problem is that, in aggregate, this knowledge is powerful and we are granting those who gather our data far more than we realize. These data-vores are doing more than trying to ensure that everyone looking for a widget buys it from them. No, they want to increase demand. Of course, increasing demand has always been one of the goals of advertising, but now they have even more power to do it.

Privacy critics worry about what Facebook, Google or Amazon knows about them, whether they will share that information or leak it, and maybe whether the government can get that information without a court order. While these concerns are legitimate, I think they are missing the broader point. Rather than caring about what they know about me, we should care about what they know about us. Detailed knowledge of individuals and their behavior coupled with the aggregate data on human behavior now available at unprecedented scale grants incredible power. Knowing about all of us - how we behave, how our behavior has changed over time, under what conditions our behavior is subject to change, and what factors are likely to impact our decision-making under various conditions - provides a roadmap for designing persuasive technologies. For the most part, the ethical implications of widespread deployment of persuasive technologies remain unexamined.

Using all of the trace data we leave in our digital wakes to target ads is known as "behavioral advertising." This is what Target was doing to identify pregnant women, and what Amazon does with every user and every purchase. But behavioral advertisers do more than just use your past behavior to guess what you want. Their goal is actually to alter user behavior. Companies use extensive knowledge gleaned from innumerable micro-experiments and massive user behavior data over time to design their systems to elicit the monetizable behavior that their business models demand. At levels as granular as Google testing click-through rates on 41 different shades of blue, data-driven companies have learned how to channel your attention, initiate behavior, and keep you coming back.

Keen awareness of human behavior has taught them to harness fundamental desires and needs, short-circuiting feedback mechanisms with instant rewards. Think of the "gamification" which now proliferates online - nearly every platform has some sort of reward or reputation point system encouraging you to tell them more about yourself. Facebook, of course, leverages our innate desires -- autobiographical identity construction and the need for interpersonal social connection -- as a means of encouraging the self-disclosure from which they profit.

The persuasive power of these technologies is not overt. Indeed, the subtlety of the persuasion is part of their strength. People often react negatively if they get a sense of being "handled" or manipulated. (This sense is where the "creepiness" backlash comes from.) But the power is very real. Target, for instance, now sends coupon books with a subtle but very intentional emphasis on baby products to women it thinks are pregnant, instead of more explicitly tailored offers that reveal how much the company knows.

Tech theorist Bruno Latour tells us that human action is mediated and "coshaped" by artifacts and material conditions. Artifacts present "scripts" that suggest behavior. The power to design these artifacts is, then, necessarily the power to influence action. The mundane example of Amazon.com illustrates this well:

The goal of this Web site is to persuade people to buy products again and again from Amazon.com. Everything on the Web site contributes to this result: user registration, tailored information, limited-time offers, third-party product reviews, one-click shopping, confirmation messages, and more. Dozens of persuasion strategies are integrated into the overall experience. Although the Amazon online experience may appear to be focused on providing mere information and seamless service, it is really about persuasion--buy things now and come back for more.

In some ways, this is just an update to the longstanding discussion in business ethics circles over the implications of persuasive advertising. Behavioral economics has shown that humans' cognitive biases can be exploited, and Roger Crisp has noted that subliminal and persuasive advertising undermines the autonomy of the consumer. And the advent of big data and user-centered design has provided those who would persuade with a new and more powerful arsenal. This has led design ethicists to call for the explicit "moralization of technology," wherein designers would have to confront the ethical implications of the actions they shape.

There is another significant layer, which complicates the ethics of data and power. The data all of these firms collect is proprietary and closed. Analysis of human behavior from the greatest trove of data ever collected is limited to questions of how best to harvest clicks and turn a profit. Not that there is no merit to this, but only these private companies and the select few researchers they bless can study these phenomena at scale. Thus, industry outpaces academia, and the people building and implementing persuasive technologies know much more than the critics. The result is a fundamental information asymmetry. The data collectors have more information than those they are collecting the data from; the persuaders have more power than the persuaded.

Judging whether this is good or bad depends on your framework for evaluating corporate behavior and the extent to which you trust the market as a force to prevent abuse. To be sure, there is a desire for the services that these companies offer and they are meeting a legitimate market demand. However, in a sector filled with large oligopolistic firms bolstered by network effects and opaque terms-of-service agreements laden with fine print, there are legitimate reasons to question the efficacy of the market as a regulator of these issues.

A few things are certain, however. One is that the goals of the companies collecting the data are not necessarily the same as the goals of the people they are tracking. Another is that, as we establish norms for dealing with personal and behavioral data, we should approach the issue with a full understanding of the scope of what's at stake. To understand the stakes, our critiques of ad tracking (and the fundamental asymmetries it creates) need to focus more on power and less on privacy.

The privacy framework tells us that we should feel violated by what they know about us. Understanding these issues in the context of power tells us that we should feel manipulated and controlled.

This piece was informed by discussions with James Williams, a doctoral candidate at the Oxford Internet Institute researching the ethical implications of persuasive technologies.