Rainbird: Realtime Analytics at Twitter (Strata 2011)


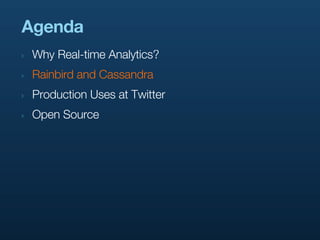

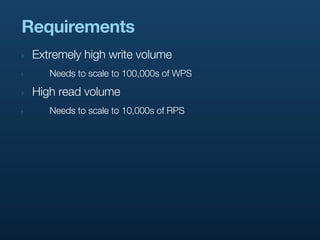

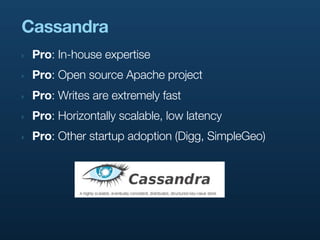

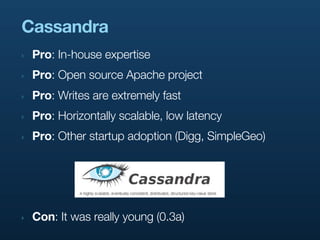

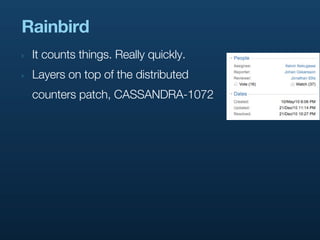

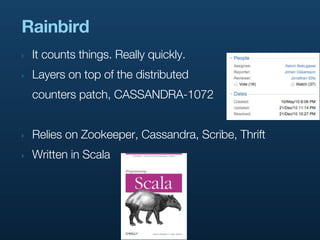

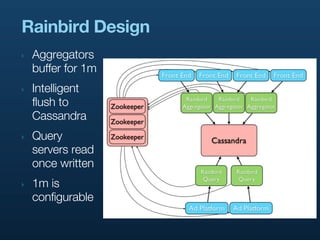

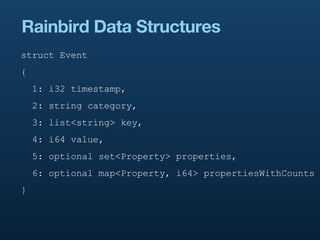

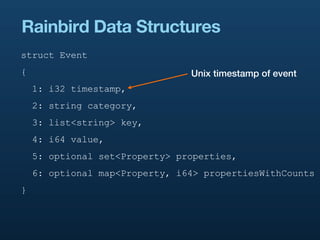

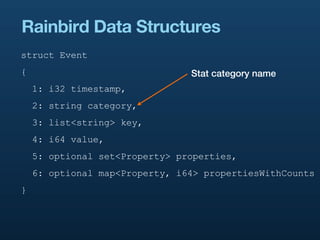

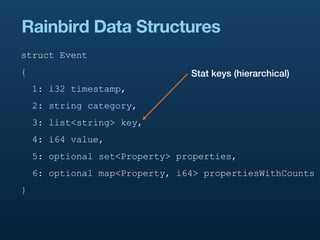

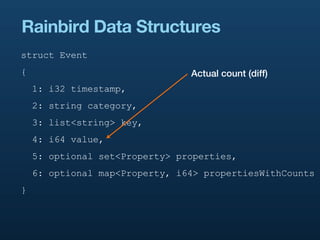

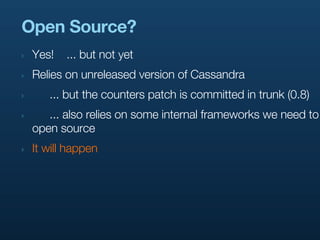

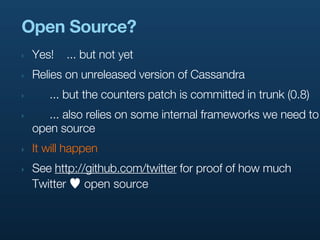

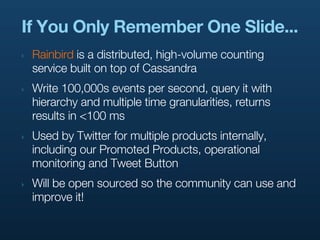

Introducing Rainbird, Twitter's high volume distributed counting service for realtime analytics, built on Cassandra. This presentation looks at the motivation, design, and uses of Rainbird across Twitter.


![Hierarchical Aggregation

‣ Say we’re counting Promoted Tweet impressions

‣ category = pti

‣ keys = [advertiser_id, campaign_id, tweet_id]

‣ count = 1

‣ Rainbird automatically increments the count for

‣ [advertiser_id, campaign_id, tweet_id]

‣ [advertiser_id, campaign_id]

‣ [advertiser_id]

‣ Means fast queries over each level of hierarchy

‣ Configurable in rainbird.conf, or dynamically via ZK](https://image.slidesharecdn.com/realtimeanalyticsattwitter-strata2011-110204123031-phpapp02/85/Rainbird-Realtime-Analytics-at-Twitter-Strata-2011-33-320.jpg)
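The prefix-increment behaviour described on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `HierarchicalCounter` class, its method names, and the sample IDs are hypothetical, and an in-memory dictionary stands in for the Cassandra-backed storage that Rainbird actually uses.

```python
from collections import defaultdict


class HierarchicalCounter:
    """In-memory stand-in for a hierarchical counting service (hypothetical)."""

    def __init__(self):
        # Maps (category, key-prefix tuple) -> running count.
        self._counts = defaultdict(int)

    def increment(self, category, keys, count=1):
        # Add `count` to the full key and to every shorter prefix of it.
        for depth in range(len(keys), 0, -1):
            self._counts[(category, tuple(keys[:depth]))] += count

    def get(self, category, keys):
        # Each level is a single lookup because it was aggregated on write.
        return self._counts.get((category, tuple(keys)), 0)


counter = HierarchicalCounter()
# Sample advertiser, campaign, and tweet IDs are made up for illustration.
counter.increment("pti", ["adv_1", "camp_9", "tweet_100"])
counter.increment("pti", ["adv_1", "camp_9", "tweet_101"])
counter.increment("pti", ["adv_1", "camp_7", "tweet_200"])

print(counter.get("pti", ["adv_1", "camp_9", "tweet_100"]))  # 1
print(counter.get("pti", ["adv_1", "camp_9"]))               # 2
print(counter.get("pti", ["adv_1"]))                         # 3
```

The trade-off in this sketch is one extra write per hierarchy level on every event, in exchange for a single lookup when reading any level.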

![Hierarchical Aggregation

‣ Another example: tracking URL shortener tweets/clicks

‣ full URL = http://music.amazon.com/some_really_long_path

‣ keys = [com, amazon, music, full URL]

‣ count = 1

‣ Rainbird automatically increments the count for

‣ [com, amazon, music, full URL]

‣ [com, amazon, music]

‣ [com, amazon]

‣ [com]

‣ Means we can count clicks on full URLs

‣ And automatically aggregate over domains and subdomains!](https://image.slidesharecdn.com/realtimeanalyticsattwitter-strata2011-110204123031-phpapp02/85/Rainbird-Realtime-Analytics-at-Twitter-Strata-2011-34-320.jpg)
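One way the key list on this slide might be derived from a URL is sketched below. The helper names `url_to_keys` and `prefixes` are hypothetical and are not taken from Rainbird; the sketch simply reverses the dot-separated host name and appends the full URL, so it does not treat multi-label suffixes such as `co.uk` specially.

```python
from urllib.parse import urlsplit


def url_to_keys(url):
    """Return [tld, ..., subdomain, full URL] for a URL (hypothetical helper)."""
    host = urlsplit(url).hostname or ""
    # Reverse the dot-separated host so the most general label comes first.
    labels = [label for label in reversed(host.split(".")) if label]
    return labels + [url]


def prefixes(keys):
    """List every level that one increment on `keys` would touch."""
    return [keys[:depth] for depth in range(len(keys), 0, -1)]


url = "http://music.amazon.com/some_really_long_path"
for level in prefixes(url_to_keys(url)):
    print(level)
```

Run on the slide's example URL, the loop prints the four key lists from most specific (full URL) to least specific (`['com']`).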

![Hierarchical Aggregation

‣ Another example: tracking URL shortener tweets/clicks

‣ full URL = http://music.amazon.com/some_really_long_path

‣ keys = [com, amazon, music, full URL]

‣ count = 1

‣ Rainbird automatically increments the count for

‣ [com, amazon, music, full URL] (How many people tweeted full URL?)

‣ [com, amazon, music]

‣ [com, amazon]

‣ [com]

‣ Means we can count clicks on full URLs

‣ And automatically aggregate over domains and subdomains!](https://image.slidesharecdn.com/realtimeanalyticsattwitter-strata2011-110204123031-phpapp02/85/Rainbird-Realtime-Analytics-at-Twitter-Strata-2011-35-320.jpg)

![Hierarchical Aggregation

‣ Another example: tracking URL shortener tweets/clicks

‣ full URL = http://music.amazon.com/some_really_long_path

‣ keys = [com, amazon, music, full URL]

‣ count = 1

‣ Rainbird automatically increments the count for

‣ [com, amazon, music, full URL]

‣ [com, amazon, music] (How many people tweeted any music.amazon.com URL?)

‣ [com, amazon]

‣ [com]

‣ Means we can count clicks on full URLs

‣ And automatically aggregate over domains and subdomains!](https://image.slidesharecdn.com/realtimeanalyticsattwitter-strata2011-110204123031-phpapp02/85/Rainbird-Realtime-Analytics-at-Twitter-Strata-2011-36-320.jpg)

![Hierarchical Aggregation

‣ Another example: tracking URL shortener tweets/clicks

‣ full URL = http://music.amazon.com/some_really_long_path

‣ keys = [com, amazon, music, full URL]

‣ count = 1

‣ Rainbird automatically increments the count for

‣ [com, amazon, music, full URL]

‣ [com, amazon, music]

‣ [com, amazon] (How many people tweeted any amazon.com URL?)

‣ [com]

‣ Means we can count clicks on full URLs

‣ And automatically aggregate over domains and subdomains!](https://image.slidesharecdn.com/realtimeanalyticsattwitter-strata2011-110204123031-phpapp02/85/Rainbird-Realtime-Analytics-at-Twitter-Strata-2011-37-320.jpg)

![Hierarchical Aggregation

‣ Another example: tracking URL shortener tweets/clicks

‣ full URL = http://music.amazon.com/some_really_long_path

‣ keys = [com, amazon, music, full URL]

‣ count = 1

‣ Rainbird automatically increments the count for

‣ [com, amazon, music, full URL]

‣ [com, amazon, music]

‣ [com, amazon]

‣ [com] (How many people tweeted any .com URL?)

‣ Means we can count clicks on full URLs

‣ And automatically aggregate over domains and subdomains!](https://image.slidesharecdn.com/realtimeanalyticsattwitter-strata2011-110204123031-phpapp02/85/Rainbird-Realtime-Analytics-at-Twitter-Strata-2011-38-320.jpg)

![Production Uses

‣ Internal Monitoring and Alerting

‣ We require operational reporting on all internal services

‣ Needs to be real-time, but also want longer-term aggregates

‣ Hierarchical, too: [stat, datacenter, service, machine]](https://image.slidesharecdn.com/realtimeanalyticsattwitter-strata2011-110204123031-phpapp02/85/Rainbird-Realtime-Analytics-at-Twitter-Strata-2011-48-320.jpg)
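A rough sketch of how real-time and longer-term aggregates could coexist for the `[stat, datacenter, service, machine]` hierarchy follows. The minute/hour/day granularities, the `record` function, and the stat and host names are all assumptions made for illustration; the slide does not specify how Rainbird buckets time.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Assumed bucket formats; the slide names no specific granularities.
GRANULARITIES = {
    "minute": "%Y-%m-%dT%H:%M",
    "hour": "%Y-%m-%dT%H",
    "day": "%Y-%m-%d",
}

# Maps (granularity, bucket label, key-prefix tuple) -> running count.
counts = defaultdict(int)


def record(keys, when, count=1):
    """Increment every key prefix in every time bucket containing `when`."""
    for name, fmt in GRANULARITIES.items():
        bucket = when.strftime(fmt)
        for depth in range(len(keys), 0, -1):
            counts[(name, bucket, tuple(keys[:depth]))] += count


t1 = datetime(2011, 2, 3, 10, 15, tzinfo=timezone.utc)
t2 = datetime(2011, 2, 3, 10, 45, tzinfo=timezone.utc)
record(["errors", "dc1", "search", "host-a"], t1)
record(["errors", "dc1", "search", "host-b"], t2)

# Per-minute count for one machine, and per-hour count for the whole service.
print(counts[("minute", "2011-02-03T10:15", ("errors", "dc1", "search", "host-a"))])
print(counts[("hour", "2011-02-03T10", ("errors", "dc1", "search"))])
```

In this sketch the fine-grained buckets serve alerting on recent data, while the coarser buckets give the longer-term view without rescanning individual events.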

Recommended

Unique ID generation in distributed systems by Dave Gardner, has 31 slides with 80053 views.The document discusses different strategies for generating unique IDs in a distributed system. It covers using auto-incrementing numeric IDs in MySQL, which are not resilient, and various solutions like UUIDs, Twitter Snowflake IDs, and Flickr ticket servers that generate IDs in a distributed and ordered way without coordination between data centers. It also provides code examples of generating Twitter Snowflake-like IDs in PHP without coordination using ZeroMQ.

Unique ID generation in distributed systemsDave Gardner

31 slides•80.1K views

The document discusses different strategies for generating unique IDs in a distributed system. It covers using auto-incrementing numeric IDs in MySQL, which are not resilient, and various solutions like UUIDs, Twitter Snowflake IDs, and Flickr ticket servers that generate IDs in a distributed and ordered way without coordination between data centers. It also provides code examples of generating Twitter Snowflake-like IDs in PHP without coordination using ZeroMQ.RocksDB Performance and Reliability Practices by Yoshinori Matsunobu, has 32 slides with 1354 views.Meta/Facebook's database serving social workloads is running on top of MyRocks (MySQL on RocksDB). This means our performance and reliability depends a lot on RocksDB. Not just MyRocks, but also we have other important systems running on top of RocksDB. We have learned many lessons from operating and debugging RocksDB at scale.

In this session, we will offer an overview of RocksDB, key differences from InnoDB, and share a few interesting lessons learned from production.

RocksDB Performance and Reliability PracticesYoshinori Matsunobu

32 slides•1.4K views

Meta/Facebook's database serving social workloads is running on top of MyRocks (MySQL on RocksDB). This means our performance and reliability depends a lot on RocksDB. Not just MyRocks, but also we have other important systems running on top of RocksDB. We have learned many lessons from operating and debugging RocksDB at scale.

In this session, we will offer an overview of RocksDB, key differences from InnoDB, and share a few interesting lessons learned from production.Using ClickHouse for Experimentation by Gleb Kanterov, has 33 slides with 13297 views.This document discusses using ClickHouse for experimentation and metrics at Spotify. It describes how Spotify built an experimentation platform using ClickHouse to provide teams interactive queries on granular metrics data with low latency. Key aspects include ingesting data from Google Cloud Storage to ClickHouse daily, defining metrics through a centralized catalog, and visualizing metrics and running queries using Superset connected to ClickHouse. The platform aims to reduce load on notebooks and BigQuery by serving common queries directly from ClickHouse.

Using ClickHouse for ExperimentationGleb Kanterov

33 slides•13.3K views

This document discusses using ClickHouse for experimentation and metrics at Spotify. It describes how Spotify built an experimentation platform using ClickHouse to provide teams interactive queries on granular metrics data with low latency. Key aspects include ingesting data from Google Cloud Storage to ClickHouse daily, defining metrics through a centralized catalog, and visualizing metrics and running queries using Superset connected to ClickHouse. The platform aims to reduce load on notebooks and BigQuery by serving common queries directly from ClickHouse.Real Time Analytics: Algorithms and Systems by Arun Kejariwal, has 180 slides with 23171 views.In this tutorial, an in-depth overview of streaming analytics -- applications, algorithms and platforms -- landscape is presented. We walk through how the field has evolved over the last decade and then discuss the current challenges -- the impact of the other three Vs, viz., Volume, Variety and Veracity, on Big Data streaming analytics.

Real Time Analytics: Algorithms and SystemsArun Kejariwal

180 slides•23.2K views

In this tutorial, an in-depth overview of streaming analytics -- applications, algorithms and platforms -- landscape is presented. We walk through how the field has evolved over the last decade and then discuss the current challenges -- the impact of the other three Vs, viz., Volume, Variety and Veracity, on Big Data streaming analytics. Introduction to Redis by Dvir Volk, has 24 slides with 129020 views.Redis is an in-memory key-value store that is often used as a database, cache, and message broker. It supports various data structures like strings, hashes, lists, sets, and sorted sets. While data is stored in memory for fast access, Redis can also persist data to disk. It is widely used by companies like GitHub, Craigslist, and Engine Yard to power applications with high performance needs.

Introduction to RedisDvir Volk

24 slides•129K views

Redis is an in-memory key-value store that is often used as a database, cache, and message broker. It supports various data structures like strings, hashes, lists, sets, and sorted sets. While data is stored in memory for fast access, Redis can also persist data to disk. It is widely used by companies like GitHub, Craigslist, and Engine Yard to power applications with high performance needs.Cassandra Introduction & Features by DataStax Academy, has 21 slides with 34517 views.This presentation shortly describes key features of Apache Cassandra. It was held at the Apache Cassandra Meetup in Vienna in January 2014. You can access the meetup here: http://www.meetup.com/Vienna-Cassandra-Users/

Cassandra Introduction & FeaturesDataStax Academy

21 slides•34.5K views

This presentation shortly describes key features of Apache Cassandra. It was held at the Apache Cassandra Meetup in Vienna in January 2014. You can access the meetup here: http://www.meetup.com/Vienna-Cassandra-Users/Introduction to Apache ZooKeeper by Saurav Haloi, has 30 slides with 139254 views.An introductory talk on Apache ZooKeeper at gnuNify - 2013 on 16th Feb '13, organized by Symbiosis Institute of Computer Science & Research, Pune IN

Introduction to Apache ZooKeeperSaurav Haloi

30 slides•139.3K views

An introductory talk on Apache ZooKeeper at gnuNify - 2013 on 16th Feb '13, organized by Symbiosis Institute of Computer Science & Research, Pune INWhat is in a Lucene index? by lucenerevolution, has 38 slides with 49070 views.Presented by Adrien Grand, Software Engineer, Elasticsearch

Although people usually come to Lucene and related solutions in order to make data searchable, they often realize that it can do much more for them. Indeed, its ability to handle high loads of complex queries make Lucene a perfect fit for analytics applications and, for some use-cases, even a credible replacement for a primary data-store. It is important to understand the design decisions behind Lucene in order to better understand the problems it can solve and the problems it cannot solve. This talk will explain the design decisions behind Lucene, give insights into how Lucene stores data on disk and how it differs from traditional databases. Finally, there will be highlights of recent and future changes in Lucene index file formats.

What is in a Lucene index?lucenerevolution

38 slides•49.1K views

Presented by Adrien Grand, Software Engineer, Elasticsearch

Although people usually come to Lucene and related solutions in order to make data searchable, they often realize that it can do much more for them. Indeed, its ability to handle high loads of complex queries make Lucene a perfect fit for analytics applications and, for some use-cases, even a credible replacement for a primary data-store. It is important to understand the design decisions behind Lucene in order to better understand the problems it can solve and the problems it cannot solve. This talk will explain the design decisions behind Lucene, give insights into how Lucene stores data on disk and how it differs from traditional databases. Finally, there will be highlights of recent and future changes in Lucene index file formats.Redpanda and ClickHouse by Altinity Ltd, has 14 slides with 904 views.Roko Kruze of vectorized.io describes real-time analytics using Redpanda event streams and ClickHouse data warehouse. 15 December 2021 SF Bay Area ClickHouse Meetup

Redpanda and ClickHouseAltinity Ltd

14 slides•904 views

Roko Kruze of vectorized.io describes real-time analytics using Redpanda event streams and ClickHouse data warehouse. 15 December 2021 SF Bay Area ClickHouse MeetupIntroduction to Redis by Arnab Mitra, has 31 slides with 13545 views.Redis is an open source, in-memory data structure store that can be used as a database, cache, or message broker. It supports data structures like strings, hashes, lists, sets, sorted sets with ranges and pagination. Redis provides high performance due to its in-memory storage and support for different persistence options like snapshots and append-only files. It uses client/server architecture and supports master-slave replication, partitioning, and failover. Redis is useful for caching, queues, and other transient or non-critical data.

Introduction to RedisArnab Mitra

31 slides•13.5K views

Redis is an open source, in-memory data structure store that can be used as a database, cache, or message broker. It supports data structures like strings, hashes, lists, sets, sorted sets with ranges and pagination. Redis provides high performance due to its in-memory storage and support for different persistence options like snapshots and append-only files. It uses client/server architecture and supports master-slave replication, partitioning, and failover. Redis is useful for caching, queues, and other transient or non-critical data.Introduction to memcached by Jurriaan Persyn, has 77 slides with 75654 views.Introduction to memcached, a caching service designed for optimizing performance and scaling in the web stack, seen from perspective of MySQL/PHP users. Given for 2nd year students of professional bachelor in ICT at Kaho St. Lieven, Gent.

Introduction to memcachedJurriaan Persyn

77 slides•75.7K views

Introduction to memcached, a caching service designed for optimizing performance and scaling in the web stack, seen from perspective of MySQL/PHP users. Given for 2nd year students of professional bachelor in ICT at Kaho St. Lieven, Gent.Big Data in Real-Time at Twitter by nkallen, has 71 slides with 139810 views.The document summarizes how Twitter handles and analyzes large amounts of real-time data, including tweets, timelines, social graphs, and search indices. It describes Twitter's original implementations using relational databases and the problems they encountered due to scale. It then discusses their current solutions, which involve partitioning the data across multiple servers, replicating and indexing the partitions, and pre-computing derived data when possible to enable low-latency queries. The principles discussed include exploiting locality, keeping working data in memory, and distributing computation across partitions to improve scalability and throughput.

Big Data in Real-Time at Twitternkallen

71 slides•139.8K views

The document summarizes how Twitter handles and analyzes large amounts of real-time data, including tweets, timelines, social graphs, and search indices. It describes Twitter's original implementations using relational databases and the problems they encountered due to scale. It then discusses their current solutions, which involve partitioning the data across multiple servers, replicating and indexing the partitions, and pre-computing derived data when possible to enable low-latency queries. The principles discussed include exploiting locality, keeping working data in memory, and distributing computation across partitions to improve scalability and throughput.Linux Kernel vs DPDK: HTTP Performance Showdown by ScyllaDB, has 31 slides with 1916 views.In this session I will use a simple HTTP benchmark to compare the performance of the Linux kernel networking stack with userspace networking powered by DPDK (kernel-bypass).

It is said that kernel-bypass technologies avoid the kernel because it is "slow", but in reality, a lot of the performance advantages that they bring just come from enforcing certain constraints.

As it turns out, many of these constraints can be enforced without bypassing the kernel. If the system is tuned just right, one can achieve performance that approaches kernel-bypass speeds, while still benefiting from the kernel's battle-tested compatibility, and rich ecosystem of tools.

Linux Kernel vs DPDK: HTTP Performance ShowdownScyllaDB

31 slides•1.9K views

In this session I will use a simple HTTP benchmark to compare the performance of the Linux kernel networking stack with userspace networking powered by DPDK (kernel-bypass).

It is said that kernel-bypass technologies avoid the kernel because it is "slow", but in reality, a lot of the performance advantages that they bring just come from enforcing certain constraints.

As it turns out, many of these constraints can be enforced without bypassing the kernel. If the system is tuned just right, one can achieve performance that approaches kernel-bypass speeds, while still benefiting from the kernel's battle-tested compatibility, and rich ecosystem of tools.Apache Druid 101 by Data Con LA, has 32 slides with 503 views.Data Con LA 2020

Description

Apache Druid is a cloud-native open-source database that enables developers to build highly-scalable, low-latency, real-time interactive dashboards and apps to explore huge quantities of data. This column-oriented database provides the microsecond query response times required for ad-hoc queries and programmatic analytics. Druid natively streams data from Apache Kafka (and more) and batch loads just about anything. At ingestion, Druid partitions data based on time so time-based queries run significantly faster than traditional databases, plus Druid offers SQL compatibility. Druid is used in production by AirBnB, Nielsen, Netflix and more for real-time and historical data analytics. This talk provides an introduction to Apache Druid including: Druid's core architecture and its advantages, Working with streaming and batch data in Druid, Querying data and building apps on Druid and Real-world examples of Apache Druid in action

Speaker

Matt Sarrel, Imply Data, Developer Evangelist

Apache Druid 101Data Con LA

32 slides•503 views

Data Con LA 2020

Description

Apache Druid is a cloud-native open-source database that enables developers to build highly-scalable, low-latency, real-time interactive dashboards and apps to explore huge quantities of data. This column-oriented database provides the microsecond query response times required for ad-hoc queries and programmatic analytics. Druid natively streams data from Apache Kafka (and more) and batch loads just about anything. At ingestion, Druid partitions data based on time so time-based queries run significantly faster than traditional databases, plus Druid offers SQL compatibility. Druid is used in production by AirBnB, Nielsen, Netflix and more for real-time and historical data analytics. This talk provides an introduction to Apache Druid including: Druid's core architecture and its advantages, Working with streaming and batch data in Druid, Querying data and building apps on Druid and Real-world examples of Apache Druid in action

Speaker

Matt Sarrel, Imply Data, Developer EvangelistNetflix Global Cloud Architecture by Adrian Cockcroft, has 59 slides with 72175 views.Latest version of Netflix Architecture presentation, variants presented several times during October 2012

Netflix Global Cloud ArchitectureAdrian Cockcroft

59 slides•72.2K views

Latest version of Netflix Architecture presentation, variants presented several times during October 2012Redis cluster by iammutex, has 17 slides with 7222 views.Redis Cluster is an approach to distributing Redis across multiple nodes. Key-value pairs are partitioned across nodes using consistent hashing on the key's hash slot. Nodes specialize as masters or slaves of data partitions for redundancy. Clients can query any node, which will redirect requests as needed. Nodes continuously monitor each other to detect and address failures, maintaining availability as long as each partition has at least one responsive node. The redis-trib tool is used to setup, check, resize, and repair clusters as needed.

Redis clusteriammutex

17 slides•7.2K views

Redis Cluster is an approach to distributing Redis across multiple nodes. Key-value pairs are partitioned across nodes using consistent hashing on the key's hash slot. Nodes specialize as masters or slaves of data partitions for redundancy. Clients can query any node, which will redirect requests as needed. Nodes continuously monitor each other to detect and address failures, maintaining availability as long as each partition has at least one responsive node. The redis-trib tool is used to setup, check, resize, and repair clusters as needed.Facebook Messages & HBase by 强 王, has 39 slides with 40056 views.The document discusses Facebook's use of HBase to store messaging data. It provides an overview of HBase, including its data model, performance characteristics, and how it was a good fit for Facebook's needs due to its ability to handle large volumes of data, high write throughput, and efficient random access. It also describes some enhancements Facebook made to HBase to improve availability, stability, and performance. Finally, it briefly mentions Facebook's migration of messaging data from MySQL to their HBase implementation.

Facebook Messages & HBase强 王

39 slides•40.1K views

The document discusses Facebook's use of HBase to store messaging data. It provides an overview of HBase, including its data model, performance characteristics, and how it was a good fit for Facebook's needs due to its ability to handle large volumes of data, high write throughput, and efficient random access. It also describes some enhancements Facebook made to HBase to improve availability, stability, and performance. Finally, it briefly mentions Facebook's migration of messaging data from MySQL to their HBase implementation.MyRocks Deep Dive by Yoshinori Matsunobu, has 152 slides with 25829 views.Detailed technical material about MyRocks -- RocksDB storage engine for MySQL -- https://github.com/facebook/mysql-5.6

MyRocks Deep DiveYoshinori Matsunobu

152 slides•25.8K views

Detailed technical material about MyRocks -- RocksDB storage engine for MySQL -- https://github.com/facebook/mysql-5.6Amazon S3 Best Practice and Tuning for Hadoop/Spark in the Cloud by Noritaka Sekiyama, has 60 slides with 34610 views.This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the CloudNoritaka Sekiyama

60 slides•34.6K views

This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.The Parquet Format and Performance Optimization Opportunities by Databricks, has 32 slides with 9317 views.The Parquet format is one of the most widely used columnar storage formats in the Spark ecosystem. Given that I/O is expensive and that the storage layer is the entry point for any query execution, understanding the intricacies of your storage format is important for optimizing your workloads.

As an introduction, we will provide context around the format, covering the basics of structured data formats and the underlying physical data storage model alternatives (row-wise, columnar and hybrid). Given this context, we will dive deeper into specifics of the Parquet format: representation on disk, physical data organization (row-groups, column-chunks and pages) and encoding schemes. Now equipped with sufficient background knowledge, we will discuss several performance optimization opportunities with respect to the format: dictionary encoding, page compression, predicate pushdown (min/max skipping), dictionary filtering and partitioning schemes. We will learn how to combat the evil that is ‘many small files’, and will discuss the open-source Delta Lake format in relation to this and Parquet in general.

This talk serves both as an approachable refresher on columnar storage as well as a guide on how to leverage the Parquet format for speeding up analytical workloads in Spark using tangible tips and tricks.

The Parquet Format and Performance Optimization OpportunitiesDatabricks

32 slides•9.3K views

The Parquet format is one of the most widely used columnar storage formats in the Spark ecosystem. Given that I/O is expensive and that the storage layer is the entry point for any query execution, understanding the intricacies of your storage format is important for optimizing your workloads.

As an introduction, we will provide context around the format, covering the basics of structured data formats and the underlying physical data storage model alternatives (row-wise, columnar and hybrid). Given this context, we will dive deeper into specifics of the Parquet format: representation on disk, physical data organization (row-groups, column-chunks and pages) and encoding schemes. Now equipped with sufficient background knowledge, we will discuss several performance optimization opportunities with respect to the format: dictionary encoding, page compression, predicate pushdown (min/max skipping), dictionary filtering and partitioning schemes. We will learn how to combat the evil that is ‘many small files’, and will discuss the open-source Delta Lake format in relation to this and Parquet in general.

This talk serves both as an approachable refresher on columnar storage as well as a guide on how to leverage the Parquet format for speeding up analytical workloads in Spark using tangible tips and tricks.Whoops, The Numbers Are Wrong! Scaling Data Quality @ Netflix by DataWorks Summit, has 49 slides with 1400 views.Netflix is a famously data-driven company. Data is used to make informed decisions on everything from content acquisition to content delivery, and everything in-between. As with any data-driven company, it’s critical that data used by the business is accurate. Or, at worst, that the business has visibility into potential quality issues as soon as they arise. But even in the most mature data warehouses, data quality can be hard. How can we ensure high quality in a cloud-based, internet-scale, modern big data warehouse employing a variety of data engineering technologies?

In this talk, Michelle Ufford will share how the Data Engineering & Analytics team at Netflix is doing exactly that. We’ll kick things off with a quick overview of Netflix’s analytics environment, then dig into details of our data quality solution. We’ll cover what worked, what didn’t work so well, and what we plan to work on next. We’ll conclude with some tips and lessons learned for ensuring data quality on big data.

Whoops, The Numbers Are Wrong! Scaling Data Quality @ NetflixDataWorks Summit

49 slides•1.4K views

Netflix is a famously data-driven company. Data is used to make informed decisions on everything from content acquisition to content delivery, and everything in-between. As with any data-driven company, it’s critical that data used by the business is accurate. Or, at worst, that the business has visibility into potential quality issues as soon as they arise. But even in the most mature data warehouses, data quality can be hard. How can we ensure high quality in a cloud-based, internet-scale, modern big data warehouse employing a variety of data engineering technologies?

In this talk, Michelle Ufford will share how the Data Engineering & Analytics team at Netflix is doing exactly that. We’ll kick things off with a quick overview of Netflix’s analytics environment, then dig into details of our data quality solution. We’ll cover what worked, what didn’t work so well, and what we plan to work on next. We’ll conclude with some tips and lessons learned for ensuring data quality on big data.XStream: stream processing platform at facebook by Aniket Mokashi, has 22 slides with 603 views.XStream is Facebook's unified stream processing platform that provides a fully managed stream processing service. It was built using the Stylus C++ stream processing framework and uses a common SQL dialect called CoreSQL. XStream employs an interpretive execution model using the new Velox vectorized SQL evaluation engine for high performance. This provides a consistent and high efficiency stream processing platform to support diverse real-time use cases at planetary scale for Facebook.

XStream: stream processing platform at facebookAniket Mokashi

22 slides•603 views

XStream is Facebook's unified stream processing platform that provides a fully managed stream processing service. It was built using the Stylus C++ stream processing framework and uses a common SQL dialect called CoreSQL. XStream employs an interpretive execution model using the new Velox vectorized SQL evaluation engine for high performance. This provides a consistent and high efficiency stream processing platform to support diverse real-time use cases at planetary scale for Facebook.What's New in Apache Hive by DataWorks Summit, has 37 slides with 2170 views.Apache Hive is a rapidly evolving project which continues to enjoy great adoption in the big data ecosystem. As Hive continues to grow its support for analytics, reporting, and interactive query, the community is hard at work in improving it along with many different dimensions and use cases. This talk will provide an overview of the latest and greatest features and optimizations which have landed in the project over the last year. Materialized views, the extension of ACID semantics to non-ORC data, and workload management are some noteworthy new features.

We will discuss optimizations which provide major performance gains as well as integration with other big data technologies such as Apache Spark, Druid, and Kafka. The talk will also provide a glimpse of what is expected to come in the near future.

What's New in Apache HiveDataWorks Summit

37 slides•2.2K views

Apache Hive is a rapidly evolving project which continues to enjoy great adoption in the big data ecosystem. As Hive continues to grow its support for analytics, reporting, and interactive query, the community is hard at work in improving it along with many different dimensions and use cases. This talk will provide an overview of the latest and greatest features and optimizations which have landed in the project over the last year. Materialized views, the extension of ACID semantics to non-ORC data, and workload management are some noteworthy new features.

We will discuss optimizations which provide major performance gains as well as integration with other big data technologies such as Apache Spark, Druid, and Kafka. The talk will also provide a glimpse of what is expected to come in the near future.

Netflix viewing data architecture evolution - QCon 2014 by Philip Fisher-Ogden

53 slides•27.4K views

Netflix's architecture for viewing data has evolved as streaming usage has grown. Each generation was designed for the next order of magnitude, and was informed by learnings from the previous. From SQL to NoSQL, from data center to cloud, from proprietary to open source, look inside to learn how this system has evolved. (from talk given at QConSF 2014)

Hardening Kafka Replication by confluent

232 slides•5.7K views

(Jason Gustafson, Confluent) Kafka Summit SF 2018

Kafka has a well-designed replication protocol, but over the years, we have found some extremely subtle edge cases which can, in the worst case, lead to data loss. We fixed the cases we were aware of in version 0.11.0.0, but shortly after that, another edge case popped up and then another. Clearly we needed a better approach to verify the correctness of the protocol. What we found is Leslie Lamport’s specification language TLA+.

In this talk I will discuss how we have stepped up our testing methodology in Apache Kafka to include formal specification and model checking using TLA+. I will cover the following:

1. How Kafka replication works

2. What weaknesses we have found over the years

3. How these problems have been fixed

4. How we have used TLA+ to verify the fixed protocol.

This talk will give you a deeper understanding of Kafka replication internals and its semantics. The replication protocol is a great case study in the complex behavior of distributed systems. By studying the faults and how they were fixed, you will have more insight into the kinds of problems that may lurk in your own designs. You will also learn a little bit of TLA+ and how it can be used to verify distributed algorithms.

SSD Deployment Strategies for MySQL by Yoshinori Matsunobu

52 slides•18.9K views

Slides for MySQL Conference & Expo 2010: http://en.oreilly.com/mysql2010/public/schedule/detail/13519

Apache Spark Architecture by Alexey Grishchenko

114 slides•80.6K views

This is the presentation I gave at JavaDay Kiev 2015 on the architecture of Apache Spark. It covers the memory model, the shuffle implementations, data frames, and some other high-level stuff, and can be used as an introduction to Apache Spark.

Interactive Analytics in Human Time by DataWorks Summit

34 slides•6.3K views

This document discusses Yahoo's approach to interactive analytics on human timescales for their large-scale advertising data warehouse. It describes how they ingest billions of daily events and terabytes of data, transform and store it using technologies like Druid and Storm, and perform real-time analytics like computing overlaps between user groups in under a minute. It also compares their "instant overlap" technique using feature sequences and bitmaps to existing approaches like exact computation and sketches.

Scalable Event Analytics with MongoDB & Ruby on Rails by Jared Rosoff

45 slides•28.4K views

The document discusses scaling event analytics applications using Ruby on Rails and MongoDB. It describes how the author's startup initially used a standard Rails architecture with MySQL, but ran into performance bottlenecks. It then explores solutions like replication, sharding, key-value stores and Hadoop, but notes the development challenges with each approach. The document concludes that using MongoDB provided scalable writes, flexible reporting and the ability to keep the application as "just a Rails app". MongoDB's sharding allows scaling to high concurrency levels with increased storage and transaction capacity across shards.

Analyzing Big Data at Twitter (Web 2.0 Expo NYC Sep 2010) by Kevin Weil

75 slides•5.5K views

A look at Twitter's data lifecycle, some of the tools we use to handle big data, and some of the questions we answer from our data.

More Related Content

What's hot (20)

Redpanda and ClickHouse by Altinity Ltd

14 slides•904 views

Roko Kruze of vectorized.io describes real-time analytics using Redpanda event streams and ClickHouse data warehouse. 15 December 2021 SF Bay Area ClickHouse Meetup

Introduction to Redis by Arnab Mitra

31 slides•13.5K views

Redis is an open source, in-memory data structure store that can be used as a database, cache, or message broker. It supports data structures like strings, hashes, lists, sets, sorted sets with ranges and pagination. Redis provides high performance due to its in-memory storage and support for different persistence options like snapshots and append-only files. It uses client/server architecture and supports master-slave replication, partitioning, and failover. Redis is useful for caching, queues, and other transient or non-critical data.

Introduction to memcached by Jurriaan Persyn

77 slides•75.7K views

Introduction to memcached, a caching service designed for optimizing performance and scaling in the web stack, seen from the perspective of MySQL/PHP users. Given for 2nd year students of the professional bachelor in ICT at Kaho St. Lieven, Gent.

Big Data in Real-Time at Twitter by nkallen

71 slides•139.8K views

The document summarizes how Twitter handles and analyzes large amounts of real-time data, including tweets, timelines, social graphs, and search indices. It describes Twitter's original implementations using relational databases and the problems they encountered due to scale. It then discusses their current solutions, which involve partitioning the data across multiple servers, replicating and indexing the partitions, and pre-computing derived data when possible to enable low-latency queries. The principles discussed include exploiting locality, keeping working data in memory, and distributing computation across partitions to improve scalability and throughput.

Linux Kernel vs DPDK: HTTP Performance Showdown by ScyllaDB

31 slides•1.9K views

In this session I will use a simple HTTP benchmark to compare the performance of the Linux kernel networking stack with userspace networking powered by DPDK (kernel-bypass).

It is said that kernel-bypass technologies avoid the kernel because it is "slow", but in reality, a lot of the performance advantages that they bring just come from enforcing certain constraints.

As it turns out, many of these constraints can be enforced without bypassing the kernel. If the system is tuned just right, one can achieve performance that approaches kernel-bypass speeds, while still benefiting from the kernel's battle-tested compatibility, and rich ecosystem of tools.

Apache Druid 101 by Data Con LA

32 slides•503 views

Data Con LA 2020

Description

Apache Druid is a cloud-native open-source database that enables developers to build highly-scalable, low-latency, real-time interactive dashboards and apps to explore huge quantities of data. This column-oriented database provides the microsecond query response times required for ad-hoc queries and programmatic analytics. Druid natively streams data from Apache Kafka (and more) and batch loads just about anything. At ingestion, Druid partitions data based on time so time-based queries run significantly faster than traditional databases, plus Druid offers SQL compatibility. Druid is used in production by AirBnB, Nielsen, Netflix and more for real-time and historical data analytics. This talk provides an introduction to Apache Druid including: Druid's core architecture and its advantages, Working with streaming and batch data in Druid, Querying data and building apps on Druid and Real-world examples of Apache Druid in action

Speaker

Matt Sarrel, Imply Data, Developer Evangelist

Netflix Global Cloud Architecture by Adrian Cockcroft

59 slides•72.2K views

Latest version of Netflix Architecture presentation, variants presented several times during October 2012

Redis cluster by iammutex

17 slides•7.2K views

Redis Cluster is an approach to distributing Redis across multiple nodes. Key-value pairs are partitioned across nodes using consistent hashing on the key's hash slot. Nodes specialize as masters or slaves of data partitions for redundancy. Clients can query any node, which will redirect requests as needed. Nodes continuously monitor each other to detect and address failures, maintaining availability as long as each partition has at least one responsive node. The redis-trib tool is used to setup, check, resize, and repair clusters as needed.

Facebook Messages & HBase by 强 王

39 slides•40.1K views

The document discusses Facebook's use of HBase to store messaging data. It provides an overview of HBase, including its data model, performance characteristics, and how it was a good fit for Facebook's needs due to its ability to handle large volumes of data, high write throughput, and efficient random access. It also describes some enhancements Facebook made to HBase to improve availability, stability, and performance. Finally, it briefly mentions Facebook's migration of messaging data from MySQL to their HBase implementation.

MyRocks Deep Dive by Yoshinori Matsunobu

152 slides•25.8K views

Detailed technical material about MyRocks -- RocksDB storage engine for MySQL -- https://github.com/facebook/mysql-5.6

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the Cloud by Noritaka Sekiyama

60 slides•34.6K views

This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.

The Parquet Format and Performance Optimization Opportunities by Databricks

32 slides•9.3K views

The Parquet format is one of the most widely used columnar storage formats in the Spark ecosystem. Given that I/O is expensive and that the storage layer is the entry point for any query execution, understanding the intricacies of your storage format is important for optimizing your workloads.

As an introduction, we will provide context around the format, covering the basics of structured data formats and the underlying physical data storage model alternatives (row-wise, columnar and hybrid). Given this context, we will dive deeper into specifics of the Parquet format: representation on disk, physical data organization (row-groups, column-chunks and pages) and encoding schemes. Now equipped with sufficient background knowledge, we will discuss several performance optimization opportunities with respect to the format: dictionary encoding, page compression, predicate pushdown (min/max skipping), dictionary filtering and partitioning schemes. We will learn how to combat the evil that is ‘many small files’, and will discuss the open-source Delta Lake format in relation to this and Parquet in general.

This talk serves both as an approachable refresher on columnar storage as well as a guide on how to leverage the Parquet format for speeding up analytical workloads in Spark using tangible tips and tricks.

Whoops, The Numbers Are Wrong! Scaling Data Quality @ Netflix by DataWorks Summit

49 slides•1.4K views

Netflix is a famously data-driven company. Data is used to make informed decisions on everything from content acquisition to content delivery, and everything in-between. As with any data-driven company, it’s critical that data used by the business is accurate. Or, at worst, that the business has visibility into potential quality issues as soon as they arise. But even in the most mature data warehouses, data quality can be hard. How can we ensure high quality in a cloud-based, internet-scale, modern big data warehouse employing a variety of data engineering technologies?

In this talk, Michelle Ufford will share how the Data Engineering & Analytics team at Netflix is doing exactly that. We’ll kick things off with a quick overview of Netflix’s analytics environment, then dig into details of our data quality solution. We’ll cover what worked, what didn’t work so well, and what we plan to work on next. We’ll conclude with some tips and lessons learned for ensuring data quality on big data.

XStream: stream processing platform at facebook by Aniket Mokashi

22 slides•603 views

XStream is Facebook's unified stream processing platform that provides a fully managed stream processing service. It was built using the Stylus C++ stream processing framework and uses a common SQL dialect called CoreSQL. XStream employs an interpretive execution model using the new Velox vectorized SQL evaluation engine for high performance. This provides a consistent and high efficiency stream processing platform to support diverse real-time use cases at planetary scale for Facebook.What's New in Apache Hive by DataWorks Summit, has 37 slides with 2170 views.Apache Hive is a rapidly evolving project which continues to enjoy great adoption in the big data ecosystem. As Hive continues to grow its support for analytics, reporting, and interactive query, the community is hard at work in improving it along with many different dimensions and use cases. This talk will provide an overview of the latest and greatest features and optimizations which have landed in the project over the last year. Materialized views, the extension of ACID semantics to non-ORC data, and workload management are some noteworthy new features.

We will discuss optimizations which provide major performance gains as well as integration with other big data technologies such as Apache Spark, Druid, and Kafka. The talk will also provide a glimpse of what is expected to come in the near future.

What's New in Apache HiveDataWorks Summit

37 slides•2.2K views

Apache Hive is a rapidly evolving project which continues to enjoy great adoption in the big data ecosystem. As Hive continues to grow its support for analytics, reporting, and interactive query, the community is hard at work in improving it along with many different dimensions and use cases. This talk will provide an overview of the latest and greatest features and optimizations which have landed in the project over the last year. Materialized views, the extension of ACID semantics to non-ORC data, and workload management are some noteworthy new features.

We will discuss optimizations which provide major performance gains as well as integration with other big data technologies such as Apache Spark, Druid, and Kafka. The talk will also provide a glimpse of what is expected to come in the near future.Netflix viewing data architecture evolution - QCon 2014 by Philip Fisher-Ogden, has 53 slides with 27386 views.Netflix's architecture for viewing data has evolved as streaming usage has grown. Each generation was designed for the next order of magnitude, and was informed by learnings from the previous. From SQL to NoSQL, from data center to cloud, from proprietary to open source, look inside to learn how this system has evolved. (from talk given at QConSF 2014)

Netflix viewing data architecture evolution - QCon 2014Philip Fisher-Ogden

53 slides•27.4K views

Netflix's architecture for viewing data has evolved as streaming usage has grown. Each generation was designed for the next order of magnitude, and was informed by learnings from the previous. From SQL to NoSQL, from data center to cloud, from proprietary to open source, look inside to learn how this system has evolved. (from talk given at QConSF 2014)Hardening Kafka Replication by confluent, has 232 slides with 5708 views.(Jason Gustafson, Confluent) Kafka Summit SF 2018

Kafka has a well-designed replication protocol, but over the years, we have found some extremely subtle edge cases which can, in the worst case, lead to data loss. We fixed the cases we were aware of in version 0.11.0.0, but shortly after that, another edge case popped up and then another. Clearly we needed a better approach to verify the correctness of the protocol. What we found is Leslie Lamport’s specification language TLA+.

In this talk I will discuss how we have stepped up our testing methodology in Apache Kafka to include formal specification and model checking using TLA+. I will cover the following:

1. How Kafka replication works

2. What weaknesses we have found over the years

3. How these problems have been fixed

4. How we have used TLA+ to verify the fixed protocol.

This talk will give you a deeper understanding of Kafka replication internals and its semantics. The replication protocol is a great case study in the complex behavior of distributed systems. By studying the faults and how they were fixed, you will have more insight into the kinds of problems that may lurk in your own designs. You will also learn a little bit of TLA+ and how it can be used to verify distributed algorithms.

Hardening Kafka Replication confluent

232 slides•5.7K views

(Jason Gustafson, Confluent) Kafka Summit SF 2018

Kafka has a well-designed replication protocol, but over the years, we have found some extremely subtle edge cases which can, in the worst case, lead to data loss. We fixed the cases we were aware of in version 0.11.0.0, but shortly after that, another edge case popped up and then another. Clearly we needed a better approach to verify the correctness of the protocol. What we found is Leslie Lamport’s specification language TLA+.

In this talk I will discuss how we have stepped up our testing methodology in Apache Kafka to include formal specification and model checking using TLA+. I will cover the following:

1. How Kafka replication works

2. What weaknesses we have found over the years

3. How these problems have been fixed

4. How we have used TLA+ to verify the fixed protocol.

This talk will give you a deeper understanding of Kafka replication internals and its semantics. The replication protocol is a great case study in the complex behavior of distributed systems. By studying the faults and how they were fixed, you will have more insight into the kinds of problems that may lurk in your own designs. You will also learn a little bit of TLA+ and how it can be used to verify distributed algorithms.SSD Deployment Strategies for MySQL by Yoshinori Matsunobu, has 52 slides with 18925 views.Slides for MySQL Conference & Expo 2010: http://en.oreilly.com/mysql2010/public/schedule/detail/13519

SSD Deployment Strategies for MySQLYoshinori Matsunobu

52 slides•18.9K views

Slides for MySQL Conference & Expo 2010: http://en.oreilly.com/mysql2010/public/schedule/detail/13519Apache Spark Architecture by Alexey Grishchenko, has 114 slides with 80638 views.This is the presentation I made on JavaDay Kiev 2015 regarding the architecture of Apache Spark. It covers the memory model, the shuffle implementations, data frames and some other high-level staff and can be used as an introduction to Apache Spark

Apache Spark Architecture by Alexey Grishchenko

114 slides•80.6K views

This is the presentation I gave at JavaDay Kiev 2015 on the architecture of Apache Spark. It covers the memory model, the shuffle implementations, data frames, and some other high-level stuff, and can be used as an introduction to Apache Spark.

Interactive Analytics in Human Time by DataWorks Summit

34 slides•6.3K views

This document discusses Yahoo's approach to interactive analytics on human timescales for their large-scale advertising data warehouse. It describes how they ingest billions of daily events and terabytes of data, transform and store it using technologies like Druid and Storm, and perform real-time analytics like computing overlaps between user groups in under a minute. It also compares their "instant overlap" technique using feature sequences and bitmaps to existing approaches like exact computation and sketches.

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the Cloud by Noritaka Sekiyama

60 slides•34.6K views

This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.

Viewers also liked (19)

Scalable Event Analytics with MongoDB & Ruby on Rails by Jared Rosoff

45 slides•28.4K views

The document discusses scaling event analytics applications using Ruby on Rails and MongoDB. It describes how the author's startup initially used a standard Rails architecture with MySQL, but ran into performance bottlenecks. It then explores solutions like replication, sharding, key-value stores and Hadoop, but notes the development challenges with each approach. The document concludes that using MongoDB provided scalable writes, flexible reporting and the ability to keep the application as "just a Rails app". MongoDB's sharding allows scaling to high concurrency levels with increased storage and transaction capacity across shards.

Analyzing Big Data at Twitter (Web 2.0 Expo NYC Sep 2010) by Kevin Weil

75 slides•5.5K views

A look at Twitter's data lifecycle, some of the tools we use to handle big data, and some of the questions we answer from our data.

Hadoop and pig at twitter (oscon 2010) by Kevin Weil

35 slides•9.5K views

This document summarizes Kevin Weil's presentation on Hadoop and Pig at Twitter. Weil discusses how Twitter uses Hadoop and Pig to analyze massive amounts of user data, including tweets. He explains how Pig allows for more concise and readable analytics jobs compared to raw MapReduce. Weil also provides examples of how Twitter builds data-driven products and services using these tools, such as their People Search feature.

NoSQL at Twitter (NoSQL EU 2010) by Kevin Weil

156 slides•94.4K views

A discussion of the different NoSQL-style datastores in use at Twitter, including Hadoop (with Pig for analysis), HBase, Cassandra, and FlockDB.

Hadoop, Pig, and Twitter (NoSQL East 2009) by Kevin Weil

58 slides•143.3K views

A talk on the use of Hadoop and Pig inside Twitter, focusing on the flexibility and simplicity of Pig, and the benefits of that for solving real-world big data problems.

Protocol Buffers and Hadoop at Twitter by Kevin Weil

49 slides•41.9K views

How Twitter uses Hadoop and Protocol Buffers for efficient, flexible data storage and fast MapReduce/Pig jobs.

MongoDB at the energy frontier by Valentin Kuznetsov

33 slides•9.5K views

The document discusses MongoDB's use in the CMS experiment at CERN. MongoDB is used as the backend for CMS's Data Aggregation System (DAS), which acts as an intelligent cache to query distributed data services. DAS translates user queries, retrieves data from multiple services, aggregates the results, and returns consolidated responses. This architecture allows users to access different data without knowledge of the underlying services. MongoDB provides a flexible schema and fast I/O that make it suitable for caching distributed data and executing complex queries in DAS.

Hadoop summit 2010 frameworks panel elephant bird by Kevin Weil

11 slides•2.1K views

Elephant Bird is a framework for working with structured data within Hadoop ecosystems. It allows users to specify a flexible, forward-backward compatible, self-documenting data schema and then generates code for input/output formats, Hadoop Writables, and Pig load/store functions. This reduces the amount of code needed and allows users to focus on their data. Elephant Bird underlies 20,000 Hadoop jobs per day at Twitter.

Hadoop at Twitter (Hadoop Summit 2010) by Kevin Weil

46 slides•7.1K views

Kevin Weil presented on Hadoop at Twitter. He discussed Twitter's data lifecycle including data input via Scribe and Crane, storage in HDFS and HBase, analysis using Pig and Oink, and data products like Birdbrain. He described how tools like Scribe, Crane, Elephant Bird, Pig, and HBase were developed and used at Twitter to handle large volumes of log and tabular data at petabyte scale.

Big Data at Twitter, Chirp 2010 by Kevin Weil

71 slides•14.1K views

Slides from my talk on collecting, storing, and analyzing big data at Twitter for Chirp Hack Day at Twitter's Chirp conference.

How to Start Using Analytics Without Feeling Overwhelmed by Kissmetrics on SlideShare

54 slides•3.4K views

This document provides guidance on using analytics without feeling overwhelmed. It outlines 5 principles for finding valuable analytics insights: 1) Avoid vanity metrics that don't impact business, 2) Focus on customers, 3) Track whether customers return, 4) Track your customer funnel, and 5) Establish your own benchmarks for comparison over time. It also provides 4 tactics for using Google Analytics, including setting up goals and funnels to track customer actions and conversions. Customer analytics is recommended for a more complete view of customers rather than anonymous traffic data alone.

Email Optimization: A discussion about how A/B testing generated $500 million... by MarketingSherpa

65 slides•5K views

In this webinar you’ll hear from Amelia Showalter, who headed the email and digital analytics teams for President Barack Obama’s 2012 presidential campaign.

She and Daniel Burstein, Director of Editorial Content, MECLABS, will discuss how the campaign maintained a breakneck testing schedule for its massive email program. Take an inside look at the campaign headquarters, with details of the thrilling successes and informative failures Obama for America encountered at the cutting edge of digital politics.

In this session, you'll learn:

• Why subject lines mattered so much, and the back stories behind some of the most memorable ones

• Why the prettiest email isn’t always the best email

• How a free bumper sticker offer can pay for itself many times over

• The most important takeaway from all those tests and trials

Human resonance for leaders pap 2015 by Bernhard K.F. Pelzer, has 28 slides with 2451 views. Leading in the new world of work – Human Resonance

With so many models and approaches – from large firms to business schools to boutiques – it is hard for companies to architect the tailored yet integrated experiences they need.

In our “Human Resonance” approach we offer what is needed.

Next level practice instead of best practice!