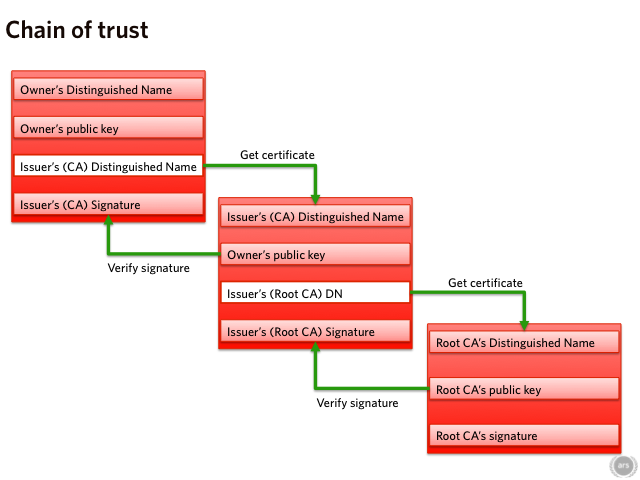

Recently at Ars we've had a couple of discussions about the use of HTTPS (that is, HTTP secured using SSL or TLS) for every website, as a way of keeping sensitive information out of reach of eavesdroppers and ensuring privacy. That's definitely a good thing, but it has a flaw: it relies on HTTPS actually being effective at protecting privacy, and so on our being able to trust it. Recent goings-on at Certificate Authority (CA) Comodo provide compelling evidence that such trust is misplaced.

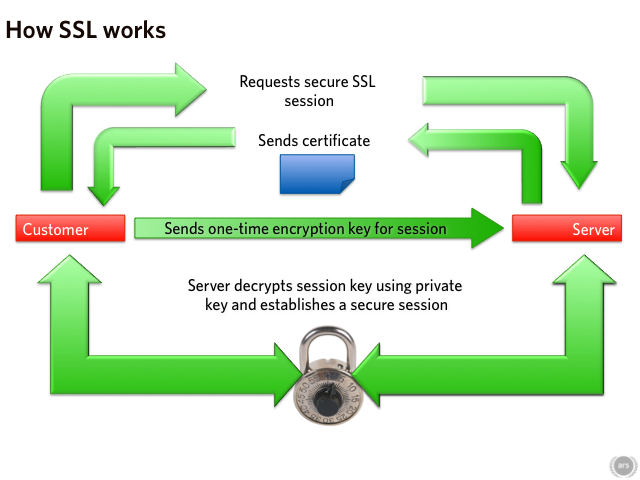

There are two interrelated aspects to SSL. The first is encryption—ensuring that nobody can understand the communication between a client and a server—and the second is authentication—proving to the client that it is actually communicating with the server it thinks it's communicating with. When a client first connects to an HTTPS server, both parties have a bit of a problem. They would like to encrypt the information they send each other, but to do this, they both need to be using the same encryption key. Obviously, they cannot just send the key to each other, because anyone listening in on the connection will be able to watch them do so, and use the key to decrypt the communication themselves. Fortunately, clever mathematics allows both parties to share an encryption key without it being disclosed to any eavesdroppers.
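The article doesn't name the "clever mathematics" involved. One well-known scheme of this kind is Diffie-Hellman key exchange, and the sketch below uses it purely as an illustration. The prime `P`, the base `G`, and the helper names are assumptions chosen for readability. The numbers are far too small for real use, and real SSL/TLS handshakes involve considerably more than this.

```python
import secrets

# Toy public parameters, visible to any eavesdropper.
# Assumption: a 32-bit prime is used only to keep the numbers readable.
P = 2**32 - 5  # a small prime modulus
G = 5          # public base


def make_keypair():
    """Pick a secret exponent and compute the public value derived from it."""
    private = secrets.randbelow(P - 3) + 2  # secret, never sent over the wire
    public = pow(G, private, P)             # safe to send in the clear
    return private, public


def shared_secret(own_private, peer_public):
    """Combine our own secret with the other side's public value."""
    return pow(peer_public, own_private, P)


# Each side generates a keypair and sends only the public half.
client_private, client_public = make_keypair()
server_private, server_public = make_keypair()

# Both sides arrive at the same key, although the key itself was never sent.
client_key = shared_secret(client_private, server_public)
server_key = shared_secret(server_private, client_public)
print(client_key == server_key)
```

An eavesdropper sees `P`, `G`, and both public values, but recovering either private exponent from them is believed to be impractical at realistic key sizes.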

Defeating the man-in-the-middle

But what if, instead of merely eavesdropping, the malicious party actually interferes with the connection, placing itself between the client and the server and intercepting everything sent between the two? This is known as a man-in-the-middle (MITM) attack, and it would be a big problem. The MITM could act as the server (as far as the client was concerned) and as the client (as far as the server was concerned), sharing one key with the client and another with the server. It could then decrypt anything the client said, examine it, re-encrypt it, and send it on to the server, and neither side would be any the wiser.
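To make the attack concrete, here is a minimal sketch of a man-in-the-middle against an unauthenticated key exchange. It assumes a toy Diffie-Hellman exchange (the article does not say which key-sharing method is in use), and `toy_cipher`, the parameters, and the sample message are all hypothetical stand-ins rather than anything SSL actually does.

```python
import hashlib
import secrets

# Toy public parameters (assumption: far too small for real use).
P = 2**32 - 5
G = 5


def make_keypair():
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def shared_secret(own_private, peer_public):
    return pow(peer_public, own_private, P)


def toy_cipher(key, data):
    """XOR data with a pad derived from the key; applying it twice decrypts.
    Illustrative only, not a secure cipher."""
    pad = hashlib.sha256(str(key).encode()).digest()
    return bytes(b ^ pad[i % len(pad)] for i, b in enumerate(data))


client_private, client_public = make_keypair()
server_private, server_public = make_keypair()

# The attacker makes one keypair per victim and swaps the public values in transit.
mitm_private_c, mitm_public_c = make_keypair()  # shown to the client as "the server"
mitm_private_s, mitm_public_s = make_keypair()  # shown to the server as "the client"

# Each victim unknowingly agrees on a key with the attacker, not with the other victim.
client_key = shared_secret(client_private, mitm_public_c)
server_key = shared_secret(server_private, mitm_public_s)
mitm_key_client = shared_secret(mitm_private_c, client_public)
mitm_key_server = shared_secret(mitm_private_s, server_public)

message = b"password=hunter2"
from_client = toy_cipher(client_key, message)           # client encrypts
intercepted = toy_cipher(mitm_key_client, from_client)  # attacker decrypts and reads
to_server = toy_cipher(mitm_key_server, intercepted)    # attacker re-encrypts
received = toy_cipher(server_key, to_server)            # server decrypts as normal

print(intercepted == message, received == message)
```

Nothing in the exchange tells the client whose public value it actually received, which is the gap that the authentication half of SSL is meant to close.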
